Every year, many code editors are released or upgraded, which makes it difficult for developers to pick one. The right code editor matters for your efficiency and creativity, which makes the decision even trickier. Many of us simply stick with the editor a boss or senior colleague once suggested.

However, code editors evolve quickly with new features and upgrades, so it pays to review the best editors on the market from time to time and adapt. The main aspects to look for in a code editor are speed, flexibility, and user interface.

If you don’t know much about code editors, you might end up with a poor one that causes problems in the long run.

Read this in-depth guide to the 5 best code editors for programmers and developers to make a wise decision. There’s a bonus for you at the end!

What is a Code Editor?

A code editor is a key tool for programmers: it is the interface in which source code is written, edited, and saved. In essence, all computer programs are software created from source code, and that source code is entered into the computer with a source code editor.

Code editors offer a range of features, and many are tailored to particular programming languages. They recognize the instructions, functions, loops, declarations, and other statements of a language, which supports developers in writing code. Formatting checks and copy-and-paste functions are also part of most code editors.

In this way, code can be written according to the particularities of the programming language. Programmers, especially beginners, may even use a plain text editor to write and edit source code.

Plain text editors have a simple interface with basic copy-and-paste capabilities and can be used to edit any kind of source code. Programmers who prefer non-standard platforms generally use this kind of tool.

Pros

- Highly customizable

- Extensive plugin support

- Can support syntax folding and syntax highlighting

- Supports auto-correction and auto-completion of words and functions

- The majority of them are open-source and available for free

- Offer excellent UI and UX

- Easy to install and use

- Support split editing

- Suitable for many programming languages

Cons

- Code isn’t easy to navigate

- Debugging the code is difficult

- Many code editors don’t show hints for syntax errors

What is a WYSIWYG Editor?

WYSIWYG stands for “What you see is what you get.” It usually refers to a tool where you drag and drop visuals and web components to design a website’s pages instead of writing the markup by hand. WYSIWYG editors make it easy to design a website and to edit it later if you want to make changes during or after the design process.

To be more precise, the website a WYSIWYG editor produces looks the same as what you saw while dragging and dropping the visuals. These days, more than 60% of websites are designed with drag-and-drop layouts in WYSIWYG editors.

Moreover, the WordPress post editor also works on the WYSIWYG principle, because the final layout of the page is the same as the layout you saw while editing it. Most WYSIWYG editors are free and open-source. However, you will have to pay to use heavy-duty WYSIWYG editors.

Moreover, you can validate the code directly in the console of a WYSIWYG editor instead of manually copying and pasting the files to validate them.

Microsoft FrontPage, released in the mid-1990s, was one of the first WYSIWYG editors with a rich interface. Dreamweaver followed in the late 1990s with massive upgrades over FrontPage.

Pros

- Easy to install

- Lightweight and quick

- Suitable for beginners

- You can design a site with only basic coding skills

- It helps you learn HTML

- It lets you make changes in context

- It can run on an outdated PC

- Easy to use

- It helps you publish to the web quickly

Cons

- The file size produced is large

- It helps with document structure, not document design

- It doesn’t help with the SEO of web pages

- Not suitable for heavy HTML projects

Why Were Code Editors Created? – A Little Background

To understand modern code editors and why they were created, it helps to look at their history. The earliest programs were written on punched cards, which were produced by a machine with a keyboard that punched holes in blank sheets of thin cardboard: a hole stood for a 1, and no hole meant 0.

Here is an IBM punch card machine from the early 1970s:

However, this method of writing and editing code was time-consuming and challenging. Therefore, researchers kept looking for an easier way to write and edit code directly on the computer, and this is why code editors were created.

One of the first widely used web code editors arrived in December 1997, when Macromedia released the famous Dreamweaver 1.0.

This editor was mainly developed for front-end development, and users could toggle between text and WYSIWYG modes. Dreamweaver was initially launched for Mac OS only, and a Windows edition followed in March 1998.

Other code editors such as Vim, TextWrangler, and TextMate also transformed the coding world and brought us to where we stand today, with some of the most advanced code editors.

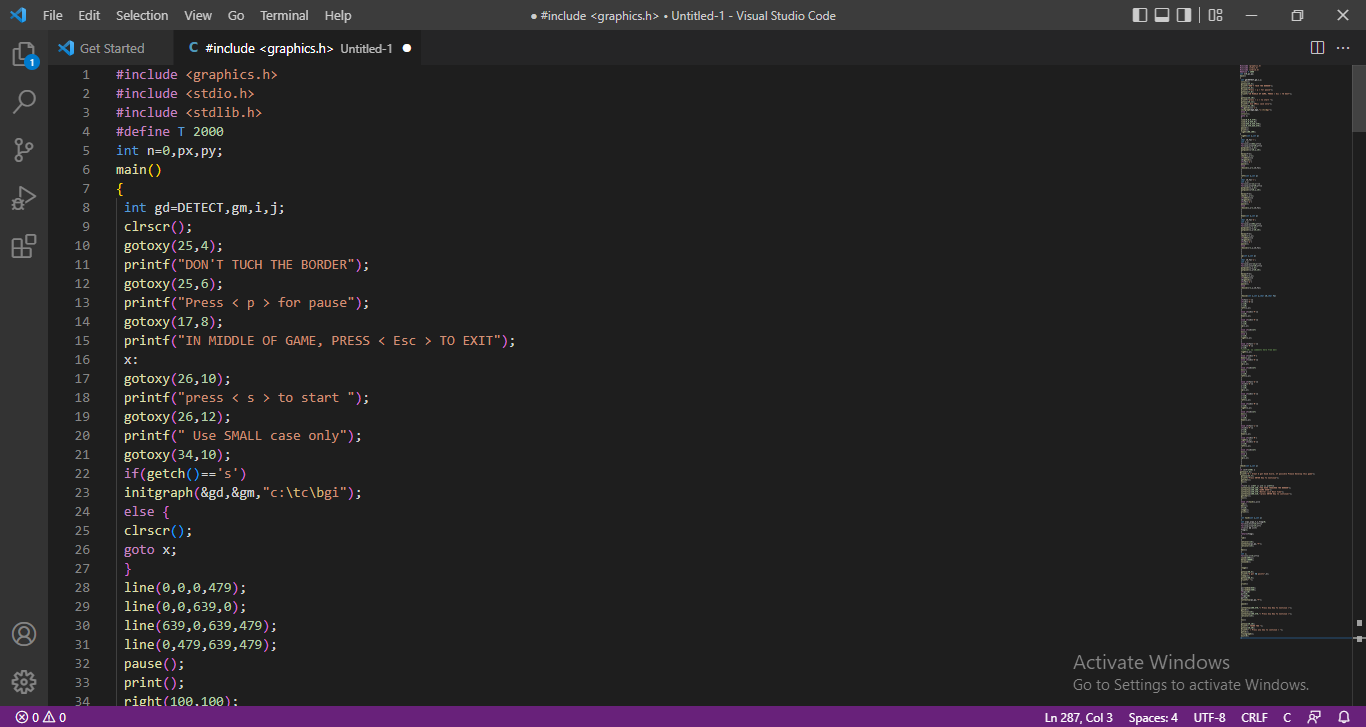

#1 Visual Studio Code — Best Code Editor for both Beginners and Experts

Visual Studio Code (VS Code, or VSC) is a free, open-source code editor made by Microsoft. VSC is robust and lightweight, and it runs on Windows, Mac, and Linux, even on lower-end devices. It was introduced with integrated support for JavaScript, TypeScript, and Node.js.

Moreover, VSC offers a suitable development environment for other programming languages, including Java, Python, C++, C#, PHP, and Go, through extensions. It also supports several runtimes, including .NET and Unity.

Installation link: Download

History

Microsoft unveiled Visual Studio Code at the Build conference on April 29, 2015, and a preview version was released soon afterward.

Visual Studio Code’s source code was published on GitHub under the MIT License on November 18, 2015. After the public preview period, the full version of Visual Studio Code was released on April 14, 2016.

The whole of Visual Studio Code’s source code is available on GitHub under the open MIT License, but the builds that Microsoft distributes are proprietary freeware.

Reasons to Use

Easy Editing, Building, and Debugging

Visual Studio Code is a fast source code editor that is perfect for everyday use. Syntax highlighting, bracket matching, auto-indentation, box selection, snippets, and more are all available in VS Code, and it supports dozens of programming languages. Thanks to its simple keyboard shortcuts and configuration, you can quickly edit, build, and browse your code.

Massive Plugins Support

VSC is an open-source program, so you can personalize almost every function to your preference and add as many third-party plugins as you need. Plugin support lets you add the functions of your choice to meet your needs.

Suitable for Both Front-End and Back-End Development

VS Code has enhanced built-in functionality for Node.js coding, including JavaScript and TypeScript, and is driven by the same core tech as Visual Studio. More tools and languages like JSX/React, HTML, CSS, SCSS, Less, and JSON are all well-represented in VS Code. This is why VS Code is one of the favorite editors of both front-end and back-end developers.

Easy to Use and Robust Architecture

Visual Studio Code blends the best of web, native, and language-specific technologies in its robust architecture. VS Code uses Electron to combine web technologies such as JavaScript and Node.js with the speed and flexibility of a native app. Moreover, the easy-to-use interface and the build and debugging tools of VS Code are helpful for programmers and developers.

Open-Source and Free of Cost

Visual Studio Code is an open-source code editor, which means that you can help its maintainers make the editor better. Moreover, it is free of cost, unlike some other code editors with similar plugins and features.

Reasons to Avoid

- Heavy Memory Consumption: Being an Electron application, Visual Studio Code uses more RAM and CPU than many native editors, which can slow down machines with limited resources.

- Hard to Disable Features: Some features, such as telemetry, are not obvious to turn off, and some plugins might cause VS Code to crash.

Supported Plugins

Bracket Pair Colorizer

This VS Code plugin gives each pair of matching brackets the same color. Nested components, classes, and methods all contain brackets and parentheses, and color-coding the pairs shows at a glance where each block starts and ends. This helps you edit and debug the code efficiently and quickly.
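As a rough illustration of the idea (not the plugin’s actual implementation), the Python sketch below uses a stack to assign a nesting depth to every bracket; an editor could then map each depth to a color. The name `bracket_depths` is made up for this example, and the sketch assumes there are no brackets inside strings or comments.

```python
# Hypothetical helper: find the nesting depth of every bracket in a string.
# Matching brackets get the same depth, so they could share a color.
PAIRS = {")": "(", "]": "[", "}": "{"}
OPENERS = set(PAIRS.values())


def bracket_depths(code):
    """Return (index, bracket, depth) for each bracket; depth starts at 0."""
    stack = []   # opening brackets still waiting for their partner
    result = []
    for index, char in enumerate(code):
        if char in OPENERS:
            result.append((index, char, len(stack)))
            stack.append(char)
        elif char in PAIRS:
            if not stack or stack[-1] != PAIRS[char]:
                raise ValueError(f"unmatched {char!r} at index {index}")
            stack.pop()
            result.append((index, char, len(stack)))
    if stack:
        raise ValueError(f"unclosed {stack[-1]!r}")
    return result


if __name__ == "__main__":
    for index, char, depth in bracket_depths("f(a[0], {b: (c)})"):
        print(index, char, depth)
```

Because an opening bracket and its partner always report the same depth, a fixed palette indexed by `depth % len(palette)` would be enough to color the pairs.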

Settings Sync

This plugin helps you save all your VS Code settings, extensions, and preferences on GitHub. This keeps your settings safe even if something goes wrong with your code editor.

SVG Viewer

This plugin helps you view SVG files as images in the code editor instead of just text.

Better Comments

Better Comments helps you write human-friendly comments in different parts of the code. This plugin comes in handy when multiple people work on the same code and need to collaborate.

In many languages, such as JavaScript and C++, a double slash (//) starts a comment. The compiler ignores these comments, so they don’t affect how the code compiles or runs.

Import Cost

The Import Cost plugin comes in handy when working on a large front-end or Node project. When you import a Node module, this extension shows the size of the module to help you decide which one is the better choice.

To-Do Highlight

This plugin highlights any to-do style comments so they will be easy to navigate during the final review.

Icons

You can use an icons plugin to add helpful icons that distinguish between files and directories. This makes your project easier to navigate, which helps when you collaborate in groups or return to coding after a break, and it makes coding more enjoyable.

Peacock

Peacock is a neat little plugin that helps you change the colors of your VS Code workspace. It enables you to identify each window when you are working in several windows simultaneously. Moreover, changing colors brings some variety to your editor and keeps you from getting bored during long coding sessions.

Shortcuts of Visual Studio Code

- Quick Fix

Button: Ctrl + .

Result: VS Code has a built-in function to rectify small mistakes in the code. When the editor flags a problem, such as a missing semicolon, this shortcut shows the available fixes so you can apply one.

- Show a Function’s Signature

Button: Ctrl + Shift + Space

Result: Programmers and developers usually forget the signatures of even the most used functions. Therefore, this shortcut helps them see parameter hints for a function’s signature.

- Open a File

Button: Ctrl + P

Result: Opens a specific file from a massive project that contains multiple files.

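- Change the Names

Button: Ctrl + Shift + L

Result: This shortcut selects every occurrence of the current selection so you can change a name everywhere in the file at once. For a language-aware rename of a single symbol, VS Code also provides F2.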

- Toggle Sidebar

Button: Ctrl + B

Result: This shortcut helps you hide the sidebar when you aren’t using it and show it when you need it.

- Cut White Spaces

Button: Ctrl + K, then Ctrl + X

Result: This shortcut trims the white spaces at the end of lines to keep the code clean.

- Add Cursors to All Matching Selections

Button: Ctrl + Shift + L

Result: Select some text and use this shortcut to add a cursor at every matching occurrence. Then you can edit once, and all the matching occurrences in the file change together.

- Go to a Specific Line

Button: Ctrl + G

Result: This command can be used to navigate to a specific line. Just type this command and enter the line number, and the cursor will directly jump to that line.

- Close All Open Editor Tabs

Button: Ctrl + K, then Ctrl + W

Result: If you have opened several editor tabs while writing or editing your code, this command closes all of them at once.

- Change the Programming Language

Button: Ctrl + K, then M

Result: VS Code detects the language of the code by checking the file extension. However, it can sometimes pick the wrong language for an unfamiliar extension. You can use this shortcut to select the correct programming language yourself.

- Fold Code Block

Button: Ctrl + Shift + [

Result: If you want to focus on a specific code block while writing, editing, or debugging, this command folds the block under the cursor, so you can collapse the blocks you are not working on and keep your attention on the one that matters.
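Trimming the white space at the end of lines, as one of the shortcuts above does, is simple to express in code. The sketch below is a minimal Python version and not VS Code’s own implementation; the function name is hypothetical, and it assumes lines are separated by `\n`.

```python
def trim_trailing_whitespace(text):
    """Remove spaces and tabs at the end of every line, keeping line breaks."""
    lines = text.split("\n")
    return "\n".join(line.rstrip(" \t") for line in lines)


if __name__ == "__main__":
    messy = "total = 1   \n\tprint(total)\t \n"
    print(repr(trim_trailing_whitespace(messy)))
```

Only spaces and tabs are stripped, so leading indentation and blank lines stay exactly where they were.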

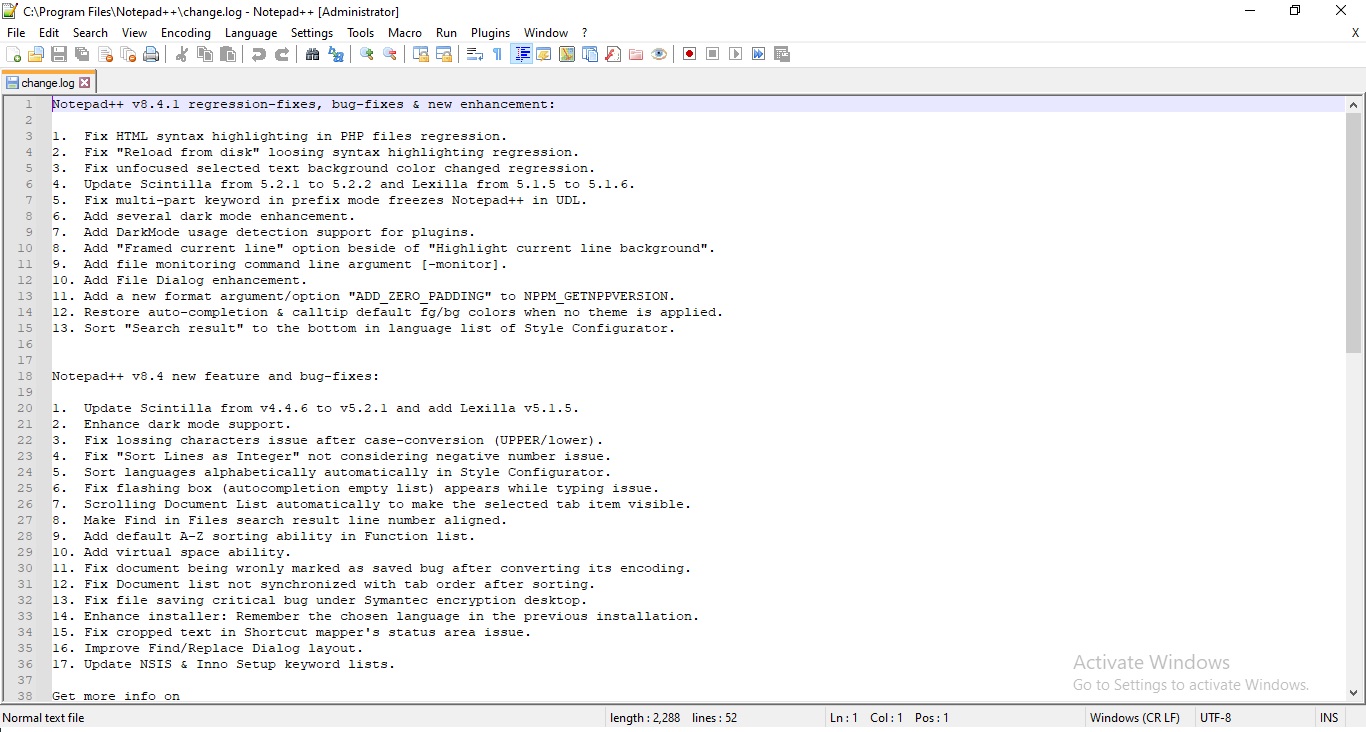

#2 Notepad++ – Best for Lightweight Coding Projects

Notepad++ is quite a famous code editor. One of the reasons for its popularity is that it is free, open-source software under the GPL license. Moreover, thanks to its simple and easy-to-use interface, it is one of the favorite editors of many programmers and developers. It doesn’t try to replicate VS Code or any other code editor.

Those who aren’t very familiar with coding will find everything they want in this editor. Notepad++ is a fantastic, easy-to-use solution not only for newbies but for developers of all levels. Moreover, because it is so lightweight, it runs smoothly on many lower-end devices.

Installation Link: Download

History

Notepad++ was started in September 2003 by Don Ho. Ho had been using an old-school Java-based editor for programming, but because of his poor experience with it, he began building a text editor written in C++.

Notepad++ was initially released as Windows-only software on SourceForge on November 25, 2003.

It is based on the Scintilla editing component and written in C++ with only Win32 API calls and the STL, to improve efficiency and minimize program size.

In January 2010, US-based open-source project hosts were required to block access from Cuba, Iran, North Korea, Sudan, and Syria to comply with US regulations.

In June 2010, Notepad++ moved out of US jurisdiction by publishing a copy on TuxFamily, in France, in protest against what its creator saw as a breach of the free and open-source software (FOSS) philosophy. Until 2015, several Notepad++ community services (such as the forums and bug tracker) remained on SourceForge.

Reasons to Use

Editor for Everyone

Notepad++ is one of the lightest code editors available. Even in 2022, it can run on a Pentium 4, and its other hardware and software requirements are far below today’s standard RAM and storage sizes. It is available for free, and the download is a mere 51 MB.

Customizable GUI

The graphical user interface of Notepad++ is entirely customizable. You can set up the whole GUI to suit your preferences and make coding more accessible and fun.

Huge Plugin Support

Despite being so lightweight and space-efficient, Notepad++ has plenty of room for plugins. You can add multiple plugins to your editor and increase its functionality. However, keep in mind that each plugin makes the editor heavier.

Syntax Highlighting

The syntax highlighting feature of Notepad++ makes it a favorite editor for newbies. As a beginner, every developer and programmer struggles with correct syntax. Notepad++ colors keywords, strings, comments, and other elements differently, which makes mistakes easier to spot and fix.
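To show what syntax highlighting involves, here is a minimal Python sketch that splits one line of Python code into tokens and labels each one; an editor would then give every label its own color. It is an illustration only and says nothing about how Notepad++ works internally. The names `TOKEN` and `classify` are hypothetical, and the regular expression covers only simple cases.

```python
import keyword
import re

# Assumed, simplified token rules for a single line of Python code.
TOKEN = re.compile(
    r"""
    \#.*                # comment: from the hash sign to the end of the line
  | "[^"\n]*"           # double-quoted string
  | '[^'\n]*'           # single-quoted string
  | [A-Za-z_]\w*        # keyword or name
  | \d+                 # integer literal
  | \S                  # any other single symbol
    """,
    re.VERBOSE,
)


def classify(line):
    """Return (text, kind) pairs for the tokens found in one line."""
    result = []
    for match in TOKEN.finditer(line):
        text = match.group()
        if text.startswith("#"):
            kind = "comment"
        elif len(text) >= 2 and text[0] in "\"'":
            kind = "string"
        elif keyword.iskeyword(text):
            kind = "keyword"
        elif text.isdigit():
            kind = "number"
        elif text[0].isalpha() or text[0] == "_":
            kind = "name"
        else:
            kind = "symbol"
        result.append((text, kind))
    return result


if __name__ == "__main__":
    for text, kind in classify("return x + 1  # sum"):
        print(f"{kind:8} {text}")
```

A misspelled keyword such as `retrun` would be labeled a plain name, which is exactly why a typo stands out visually in a highlighted editor.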

Multi-Language Support

Along with multi-language support, this editor also gives a multi-view option to let you see through different windows. It makes coding even easier.

Reasons to Avoid

- The written code may look confusing at first sight

- There is no built-in option to compile code

Supported Plugins

Auto Indent

This plugin helps the coder make the code readable for other people. Indented code is usually easy to read and understand for somebody who hasn’t written it. Jumbled code, on the other hand, is untidy and hard to read.

Autosave

This plugin can be a life-saver at times. If you are coding and your PC turns off before you have saved your work, this plugin can help you get your code back. Moreover, you can choose the autosave interval, e.g., 5 or 10 minutes.

Compare

This simple plugin lets you see the difference between two code files by comparing them.
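The kind of comparison such a plugin performs can be sketched with Python’s standard `difflib` module. This is only an illustration of line-by-line diffing, not the plugin’s code; the function name and the default file labels are assumptions made for the example.

```python
import difflib


def compare_texts(old, new, old_name="old.txt", new_name="new.txt"):
    """Return a unified diff of two strings as a list of lines."""
    return list(
        difflib.unified_diff(
            old.splitlines(),
            new.splitlines(),
            fromfile=old_name,
            tofile=new_name,
            lineterm="",
        )
    )


if __name__ == "__main__":
    before = "x = 1\ny = 2\n"
    after = "x = 1\ny = 3\n"
    print("\n".join(compare_texts(before, after)))
```

Lines starting with `-` exist only in the first text and lines starting with `+` only in the second; identical inputs produce an empty list.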

X-Brackets Lite

This plugin automatically completes an open bracket character. Leaving a bracket open can cause problems when the code is compiled, so the plugin inserts the matching closing bracket for you.

Snippet Plugin

The snippet plugin lets you store your most-used snippets and insert them quickly, which makes coding easier and more fun. If you use this plugin, you won’t need to memorize complex code structures and syntax.

Shortcuts of Notepad++

- Switching

Button: Ctrl + T

Result: This shortcut swaps the current line with the line above it, which is handy when you want to reorder lines of code.

- Block Comment

Button: Ctrl + Shift + K

Result: This shortcut works on comment markers for the selected lines. Code that is commented out is ignored by the compiler, so it won’t cause problems during compilation.

- Launch CallTip ListBox

Button: Ctrl + Space

Result: If you have forgotten the signature of a function or method while writing the code, this shortcut can help you launch the CallTip ListBox to solve this problem.

- Launch Function Completion Listbox

Button: Ctrl + Shift + Space

Result: You can use this shortcut key to launch the function completion list box, which suggests function names as you type.

- Incremental Search

Button: Ctrl + Shift + l

Result: Using this shortcut key, you can progressively search for and filter through text. Incremental search saves a lot of time and effort.

- Trim Trailing

Button: Alt + Shift + S

Result: This shortcut trims the trailing spaces at the ends of lines and then saves the file.

- Hide the Lines

Button: Alt + H

Result: The line hiding shortcut comes in handy when you want to get part of the code out of sight and focus on the rest. Hiding lines doesn’t change the file or the final results; the compiler can still read the hidden lines.

- PHP Help

Button: Alt + F1

Result: If you are stuck on a PHP function and don’t remember how it works, this shortcut opens the PHP help for it.

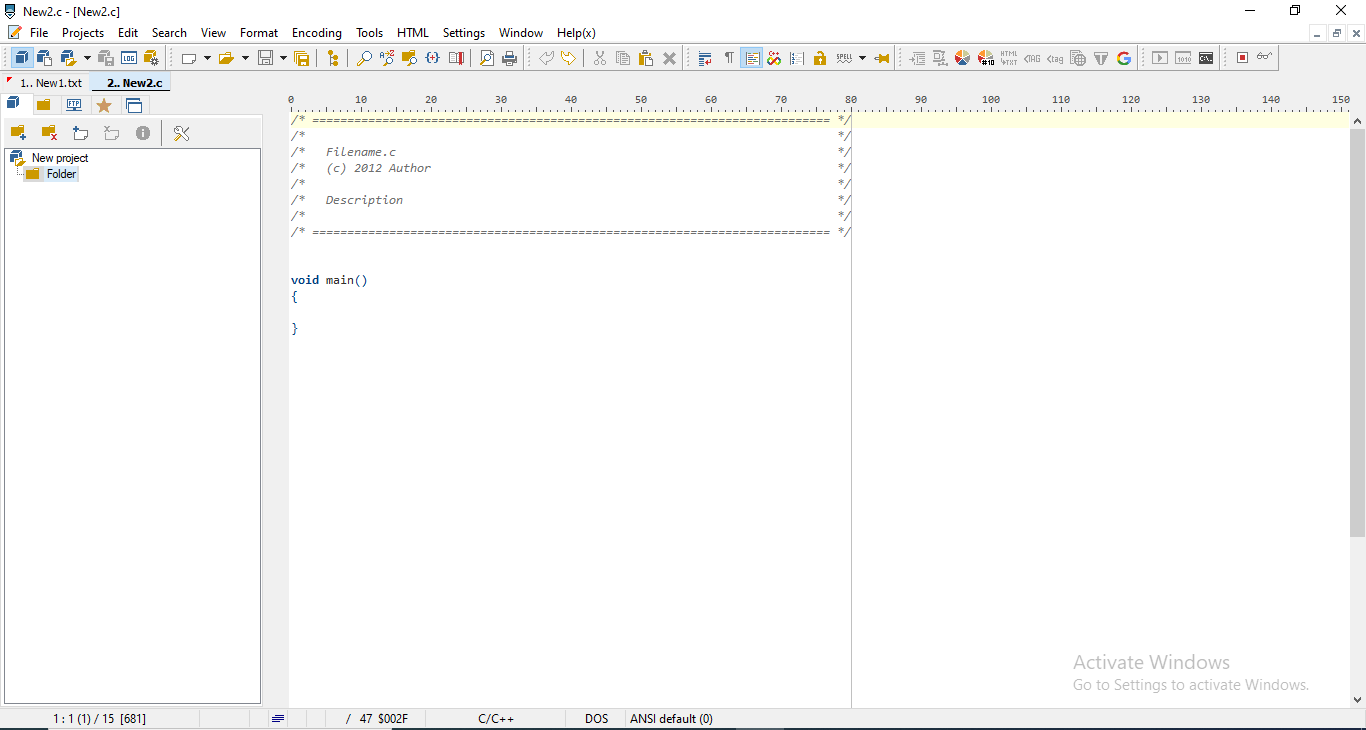

#3 PSPad – Best for Quick Code Editing

PSPad is a free code editor that is available only for Windows. It is suitable for those who work with plain text in many formats. It also includes a spell checker and a variety of customization features.

PSPad also works as a web authoring tool and offers a variety of time-saving features for building websites. For those who want a capable code editor, PSPad captures and parses compiler output, integrates external help files, compares versions, and more.

PSPad does not require any customization after downloading, and it is ready to use right away. With syntax highlighting, the editor covers various file formats and languages. Macros, clip files, and templates are also available to automate repetitive work.

Installation Link: Download

History

PSPad first came out in 2001. It was made by Czech developer Jan Fiala specifically for Windows. Since its release, it has offered many useful features, such as syntax highlighting and hex editing.

The developer made this editor highly portable: it does not need any installation. You can just download the editor, run it, and start coding.

Reasons to Use

Supports Many Programming Languages

Despite being a lightweight editor, PSPad supports many programming languages. You can write code in both front-end and back-end development languages. Commonly supported languages include Fortran, COBOL, C++, C, Java, JavaScript, PHP, Python, etc.

Built-in Templates

The PSPad editor includes templates and layouts for many programming languages, and they don’t need to be downloaded separately. These templates can assist you in creating an application and give you immediate access to a programming language’s methods and syntax. This kind of feature is usually found only in commercial editors, but PSPad provides it for free.

Spell Check

We often ignore spelling when writing, since we know predictive text and auto-correct will spot and fix our errors. Likewise, the spell check feature in the PSPad editor finds and underlines your misspelled words.

However, you must first download the relevant language dictionary from the PSPad official site and add it to your installation.

Color Codes

If you’re creating a UI in code, you’ll need to add color codes to the program to use various shades. Every shade has its own code. Coders often look up color names on Google and then search for the corresponding codes.

However, the PSPad editor makes this easier with its integrated function for inserting color codes.

Built-in Command Line Execution Function

The PSPad editor has a built-in command-line execution feature. You don’t have to launch the command prompt separately if you want to run a command or program. You can run your code using the built-in command prompt.

Reasons to Avoid

- Crashing Error: The editor sometimes crashes without any warning.

- Not Many Customization Options: The customization options provided by plugins are not as extensive as those of other editors.

Supported Plugins

Any Tag Close

This extension can help you automatically close HTML, XML, XSL, and some other arbitrary pair tags.

ASCII Set Generator

This extension helps you to generate printable sets of ASCII characters. Moreover, it can generate characters from 32 to 255 used for font design and testing. You won’t have to go through a long list of ASCII codes.
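For a sense of what such a generator produces, the Python sketch below builds rows of characters for codes 32 to 255. It is an illustration, not the extension’s script: the function name and the 16-per-row layout are assumptions, and it uses Unicode code points, which match Latin-1 in this range (codes 127 to 159 are control characters and may not display).

```python
def character_set(first=32, last=255, per_row=16):
    """Return rows of characters for the codes first..last, inclusive."""
    chars = [chr(code) for code in range(first, last + 1)]
    return ["".join(chars[i:i + per_row]) for i in range(0, len(chars), per_row)]


if __name__ == "__main__":
    # Print only the plain ASCII part so the output is safe in any terminal.
    for row in character_set(32, 126):
        print(row)
```

Changing `first`, `last`, or `per_row` gives a different test sheet, for example one row per 32 characters for a wide font specimen.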

Insert SQL Statement

It connects to a database and puts a code block (ASP/VBScript syntax) into the current content depending on the column names of the specified table. This is an easy method to insert SQL statements with less time and effort.

Count Words

This plugin counts the number of words in the current document or in the selected text. Note that it doesn’t work with old builds of PSPad, so make sure you have the latest version of the editor.
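Word counting itself takes only a few lines. The Python sketch below treats every run of letters, digits, and underscores as one word; that definition and the function name are assumptions for illustration, and the plugin may count differently.

```python
import re


def count_words(text):
    """Count runs of letters, digits, and underscores in text."""
    return len(re.findall(r"\w+", text))


if __name__ == "__main__":
    print(count_words("int total = a + b;"))
```

To count only a selection, pass the selected substring instead of the whole document.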

Create Tag

With this plugin, the word to the left of the cursor is converted to a tag pair, and the cursor is placed between the opening and closing tags. No closing tag is inserted if the word ends with a space. An XML/XHTML-style non-closing tag is inserted if the word ends with a “.”

Shortcuts of PSPad

- Select String

Button: Ctrl + Shift + ‘

Result: This keyboard shortcut selects only the strings in the code. After the selection, you can edit or remove the strings.

- SelMatchBracket

Button: Ctrl + Shift + M

Result: This shortcut selects the text up to the matching bracket. Bracket matching pairs up brackets or parentheses in languages like Java, JavaScript, and C++.

- Open in Hex Editor

Button: Shift + Ctrl + O

Result: This shortcut opens the file in the hex editor. While in the hex editor, you can view, add, change, or remove the underlying binary data.

- Indent Block

Button: Shift + Ctrl + L

Result: This shortcut indents the selected block. Indenting a dependent block, such as the body of a condition or a loop, makes the code simpler to read and understand.

- Matching Bracket

Button: Ctrl + M

Result: This shortcut helps you find the matching bracket. Place the cursor on a bracket and press Ctrl + M to jump to its partner, so you can check, edit, or remove the pair.

- Code Explorer Window

Button: Shift + Ctrl + E

Result: Hit this shortcut to open the code explorer window and see all the objects in your code, including their procedures, methods, and properties.

- Word Wrap Lines

Button: Ctrl + W

Result: This shortcut toggles word wrap. With it on, long lines continue on the next row when they reach the edge of the viewing window, so the text can be read from top to bottom without scrolling horizontally.

- Clipboard Monitor Window

Button: Alt + M

Result: This shortcut opens the clipboard monitor window. The window keeps the text you have copied to the clipboard, so you can get back to earlier snippets while editing.
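The word wrap behavior described in the shortcuts above can be approximated with Python’s standard `textwrap` module. This is a display-only sketch with a hypothetical function name: a real editor wraps lines on screen without changing the file, whereas `textwrap.wrap` also collapses runs of white space.

```python
import textwrap


def soft_wrap(line, width):
    """Split one long line into display rows no wider than width characters."""
    return textwrap.wrap(line, width=width) or [""]


if __name__ == "__main__":
    for row in soft_wrap("the quick brown fox jumps over the lazy dog", 16):
        print(row)
```

Breaks fall between words where possible, so every row fits inside the chosen width and no horizontal scrolling is needed.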

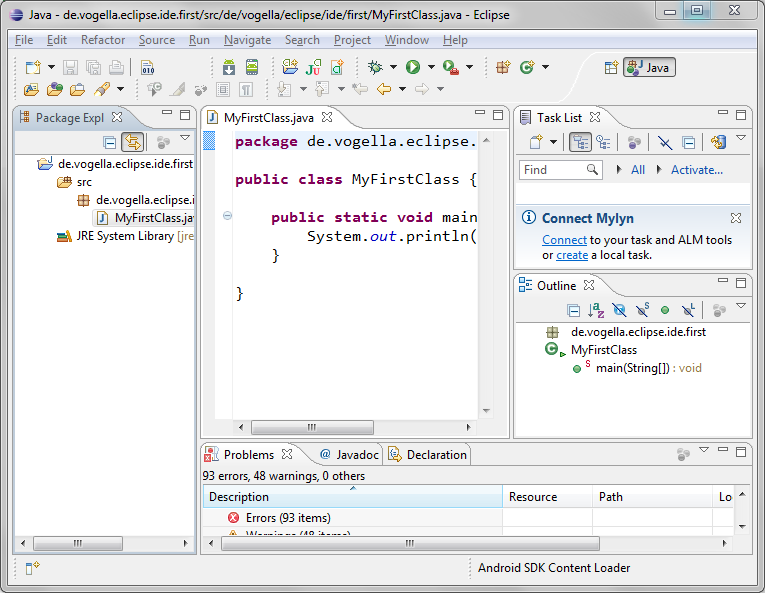

#4 Eclipse – Best for Java Developers

Eclipse is a free and open-source editor that both newbies and experienced coders can use. Eclipse began as a specialist Java editor, but it now provides far more features thanks to its many plugins and add-ons.

Eclipse’s basic version includes Java and plug-in development tooling, debugging tools, and Git/CVS support. Moreover, several additional packages are available, including tools for graphing, modeling, analyzing, testing, and creating graphical user interfaces.

The Eclipse Marketplace Client gives users access to a vast collection of plugins and information created by a growing community of developers and programmers.

Installation Link: Download

History

To support the development of Eclipse as open-source software, a consortium with a board of stewards was formed in November 2001. By that time, IBM is estimated to have already invested roughly $40 million in Eclipse.

Borland, IBM, and some other big names were the founding members. By the end of 2003, the number of stewards had risen to about 80. The Eclipse Foundation was founded in January 2004.

The OSGi Service Platform specifications were chosen as the runtime architecture in Eclipse 3.0 (launched on June 21, 2004).

On April 26, 2012, the Association for Computing Machinery recognized Eclipse with the 2011 ACM Software System Award.

Reasons to Use

Tons of Plugins

There are many plugins available for Eclipse. Moreover, users can create their own plugins with Eclipse’s Plug-in Development Environment (PDE). Over time, the launch of new and advanced plugins has made Eclipse extremely powerful.

You can customize the whole editor according to your needs with different plugins. However, make sure to check the specs of the plugin because they can make the Eclipse run slow if you don’t have an advanced PC.

Supports Modeling

The best part about Eclipse is that it supports modeling, which makes the whole programming experience easier and less time-consuming. With modeling, you can define classes, packages, and associations and represent them graphically. If you have to work on a lengthy codebase, modeling can substantially reduce the effort and time required.

Good for Heavy Coding

The interface and architecture of Eclipse are designed to facilitate heavy coding and massive projects. So, if you or your team are handling a massive project, it would be easier to work on Eclipse than on any other code editor.

Moreover, a wide choice of plugins is why this code editor is ideal for heavy coding and large projects.

Supports JDK

The JDK (Java Development Kit) is distributed by Oracle and other vendors. It includes the Java compiler, the Java runtime, and other tools needed to build a Java application. Eclipse integrates with the JDK and adds code suggestions and debugging on top of it, which makes your Java coding journey easier.

Automatic Error Reporting

The Eclipse editor has a built-in feature that reports errors automatically. It highlights syntax, class, and other errors in the code even before you compile, so you can fix them right away and save time. This feature comes in handy during long coding sessions where a single mistake can cost you time.

Reasons to Avoid

- Slow Performance: With many plugins installed and insufficient memory allocated, the performance and speed of the editor suffer.

Supported Plugins

SpotBugs

As its name suggests, SpotBugs is an open-source plugin that finds bugs in Java code. The community maintains this plugin, and it checks your code against more than 400 bug patterns. Along with scanning for bugs, this plugin also suggests solutions to them.

Maven

Apache Maven is a software project management and comprehension tool based on the concept of a project object model (POM). The plugin allows you to manage a Java-based project’s build, reporting, and documentation from a single location.

Tabnine

Tabnine is an AI auto-completion tool that assists programmers in writing better code faster. Tabnine is trained on millions of open-source programs and uses the context of your code to provide recommendations as you write.

CodeMix 3

If you’re looking to build web applications and layouts, CodeMix is an excellent place to start. It brings several VS Code functions to Eclipse, allowing you to work with multiple frameworks from within Eclipse. Additional features, like e-learning courses, are also accessible right in your IDE.

Groovy Development Tools (GDT)

Groovy is much more than an Eclipse plugin; it is a language in its own right, and it is worth knowing for writing comprehensive tests from your code editor. GDT adds rich editor support for it, including tools for content assist, testing, refactoring, and searching.

Shortcuts of Eclipse

- Search Class in the Code

Button: Ctrl + Shift + T

Result: This shortcut helps you find classes even in a complex Java project. It can find classes from both JAR files and the application itself.

- Find Resource File Including Config XML Files

Button: Ctrl + Shift + R

Result: It works the same way as the class search shortcut. However, it isn’t limited to Java: you can find other files too, including XML and config files.

- Quick Fix

Button: Ctrl + 1

Result: This shortcut can help you fix minor errors like missing semicolons or any import errors in the blink of an eye.

- Organize Imports

Button: Ctrl + Shift + O

Result: This is another Eclipse keyboard shortcut for fixing missing imports. It is especially useful if you’re copying and pasting code from another file and need to import all the classes it uses.

- Toggle Between Supertype and Subtype

Button: Ctrl + T

Result: This shortcut shows the type hierarchy, so you can move between a supertype and its subtypes directly and save time.

- Search for References

Button: Ctrl + Shift + G

Result: This shortcut looks for references to the particular procedure or variable in the whole code.

- To Add Javadoc

Button: Alt + Shift + J

Result: Instead of manually adding a Javadoc comment in the Java source file, you can use this shortcut and save time.

- Auto-Formatting

Button: Ctrl + Shift + F

Result: Formatting is one of the least favorite chores for many coders. You can use this shortcut to format the code automatically and avoid the hassle.

#5 TextWrangler – Best Code Editor for Beginners

TextWrangler is one of the most popular freeware text and code editors for the Macintosh. It was designed primarily for the needs of web authors and computer programmers, and it includes several high-performance functions for editing, searching, and manipulating text.

TextWrangler’s best-in-class features are all accessible through an intuitive interface. They include grep pattern matching, search and replace across multiple documents, function navigation, syntax coloring for a variety of source code languages, code folding, FTP and SFTP open and save, AppleScript support, and much more.

Installation Link: Download

History

In 2003, Bare Bones released TextWrangler, a professional text editor that succeeded BBEdit Lite, which was no longer being updated. Later, TextWrangler 2.0 was launched free of cost. In 2016, BBEdit 11.6 included a free mode that mirrored TextWrangler's features, and Bare Bones discontinued TextWrangler support in 2017.

TextWrangler, like BBEdit, is a simple text editor with limited formatting and styling choices.

Reasons to Use

Syntax Highlighting

Syntax is one of the most challenging things for any coder to remember. However, TextWrangler makes it easier with its built-in syntax highlighting feature. Moreover, it will also suggest the correct syntax for the highlighted code.

Easy to Use and Install

TextWrangler is a free-to-use code editor that you can easily download from the official website. Moreover, it is incredibly lightweight, so you won't have to wait long for it to install. Other than that, the whole editing experience with this editor is like a walk in the park.

Lightweight

This code editor is extremely lightweight. It can even run on older Macs. However, an average-spec machine is recommended for smooth operation.

Auto-Completion

Auto-completion is a very useful feature that coders always crave. It lets you insert functions and classes in a blink while minimizing typing and syntax errors. This feature comes in handy when working on a massive project where every second of delay can be costly.

Reasons to Avoid

- Lack of Features: TextWrangler isn't as well equipped as some of its rivals

- No Plugin Support: TextWrangler does not support plugins

TextWrangler Shortcuts

- Cycle through Windows

Button: CMD + `

Result: If you are coding in multiple windows simultaneously, this shortcut will help you cycle through them quickly.

- Find Misspelled Words

Button: CMD + ;

Result: Misspelled words can disturb your code editing process. Therefore, it is better to find and correct them with this shortcut.

- Hard Wrap

Button: CMD + \

Result: This shortcut hard wraps the text in the editor, inserting actual line breaks at the wrap width.

- Open Counterpart

Button: CMD + Opt-Up-Arrow

Result: This shortcut opens the counterpart of the selected class or function.

- Set Breakpoint

Button: CMD + Shift + L

Result: Setting a breakpoint is one of the most popular debugging techniques among experts. A breakpoint is the point in the code where you want the debugger to pause execution.

- Increase or Decrease Paragraph Indent

Button: CMD + Ctrl + Right-Arrow (For Increase)

CMD + Ctrl + Left-Arrow (For Decrease)

Result: These shortcuts increase or decrease the paragraph indent of the code lines.
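To show what the Hard Wrap shortcut above does to text, here is a small Python sketch using the standard library's `textwrap` module. It only illustrates the concept, not TextWrangler's own implementation; the `hard_wrap` helper, the 30-character width, and the sample sentence are assumptions chosen for the example.

```python
import textwrap


def hard_wrap(text, width=30):
    """Insert real newline characters so that no line exceeds `width`.

    This differs from soft wrap, where the editor only changes how long
    lines are displayed and leaves the file contents untouched.
    """
    return textwrap.fill(text, width=width)


sample = "Hard wrapping inserts real line breaks into the text at a chosen width."
wrapped = hard_wrap(sample, width=30)
print(wrapped)
```

After hard wrapping, the line breaks are part of the saved file, so every other tool that opens the file sees the same short lines.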

Some Bonus Code Editors

We did intense background research while compiling our list of the five best code editors for developers and programmers. We evaluated every editor against specific benchmarks and chose only the top 5. However, with a heavy heart, we had to leave some editors out because the list had limited room.

The code editors below are the runners-up that almost made it to our list. Here are those options:

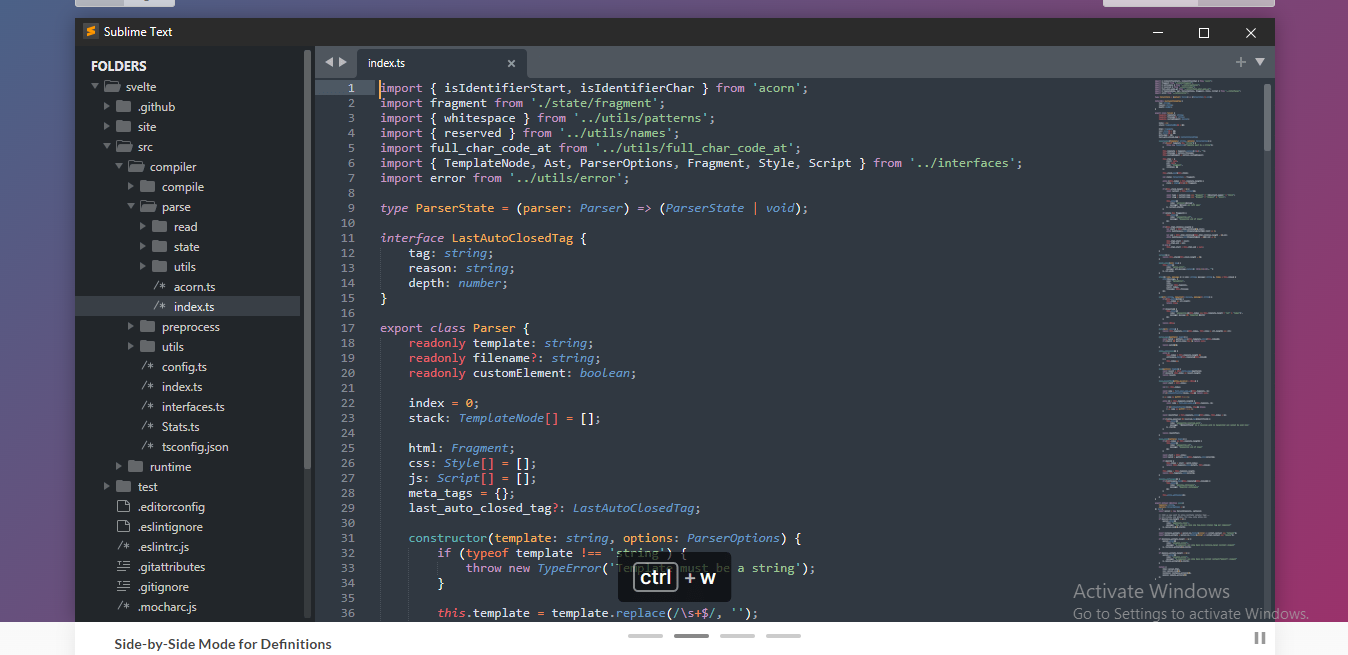

#1 Sublime Text

Sublime Text almost made it to our list but fell short due to its limited language support. This code editor is designed to handle massive files and projects. It can open a 7 MB file or a file with 200,000 lines.

Installation link: Download

Supported Languages: Python, C#, C++, and more

Supported OS: Windows, Mac, Linux

Price: Free to evaluate (a paid license is required for continued use)

Best For: People with heavy coding tasks

#2 Atom

Atom is a powerful text editor with real-time collaborative capabilities. Teletype, which is still in beta, allows developers to collaborate on code.

This code editor is also cross-platform, allowing teams to work across several operating systems.

Installation Link: Download

Supported Languages: PHP, Java, C language, C++, HTML, COBOL, and more

Supported OS: Linux, MacOS, Windows

Price: Free

Best For: People who like to use shortcuts during coding

#3 TextMate

TextMate is a simple and easy-to-use text editor. By default, it creates a plain text file when you make a new file. Users then choose what sort of document they want to create.

If you frequently deal with a specific language, you may set the default file format to your most commonly used document format to save effort and time.

Installation Link: Download

Supported Languages: Applescript, Python, Java, CSS, PHP, and more

Supported OS: MacOS

Price: Free

Best For: MacOS users

How are Code Editors Different from IDEs and Text Editors?

Simple text editors have fewer features than code editors. Syntax highlighting and auto-indentation aren't available in simple text editors.

There is also a difference between code editors and IDEs. For example, IDEs include debugging features, sample code, and other technical tools to assist programmers, while code editors assist with writing code only. A code editor identifies keywords and syntax problems according to the programming language in use.

How to Choose the Best Code Editor?

Choosing the best coding editor is essential for your whole coding journey. Different coding editors have distinct features, all of which are useful in one way or another. However, some coding editors do not provide much ease of coding, and you should avoid any such coding editor.

To help you make a wise decision, here are all the features you should look for in the best coding editor:

Primary Features

- Syntax highlighting

- Auto-indentation

- Auto-completion

- Brace matching

- Showing line numbers

User Experience: Helps to make the process faster and more efficient

Speed: A code editor should open and process code quickly

Extensibility: A coding editor should be able to add plugins to increase its utility

OS: Consider a code editor which you can use on all OS, including Windows, Mac, and Linux

Language Support: Consider the editor which supports more languages

Price: Some code editors are free. However, others have a price with more features. Have a look at the features beforehand to decide if the price is worth it.

Shortcuts: More keyboard shortcuts mean quicker and easier coding

Error Marks: Choose an editor that marks errors while you code
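Brace matching, one of the primary features listed above, is easier to judge once you see what it involves. The following Python sketch uses a stack to find the first bracket that has no partner. It is a simplified illustration under stated assumptions: the function name `find_unmatched` is hypothetical, and unlike a real editor it does not skip brackets inside strings or comments.

```python
# Maps each closing bracket to the opening bracket it must pair with
PAIRS = {")": "(", "]": "[", "}": "{"}


def find_unmatched(code):
    """Return the index of the first unmatched bracket, or -1 if all match.

    Simplification: brackets inside string literals and comments are
    treated like any other bracket.
    """
    stack = []  # holds (opening bracket, index) for brackets still open
    for index, char in enumerate(code):
        if char in "([{":
            stack.append((char, index))
        elif char in PAIRS:
            # A closer with nothing open, or with the wrong opener, is an error
            if not stack or stack[-1][0] != PAIRS[char]:
                return index
            stack.pop()
    # Any opener left on the stack was never closed; report the innermost one
    return stack[-1][1] if stack else -1


balanced = find_unmatched("def f(a, b): return [a, {b: 1}]")
unclosed = find_unmatched("print((1 + 2)")
mismatched = find_unmatched("x = [1, 2)")
```

An editor with brace matching runs a check along these lines as you type and highlights the offending position, which is also the basis for the error marks mentioned above.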

Conclusion

Choosing a code editor is one of the most important steps before starting your coding journey. A good code editor will improve the efficiency and speed of your coding. Moreover, there are various code editors, each aimed at a particular target audience.

Overall, Visual Studio Code is one of the best code editors, with powerful plugins. This code editor runs on Windows, Mac, and Linux too.

On the other hand, Notepad++ also gives its rivals tough competition. The top 5 code editors on our list are all worthy of the top position. You just have to assess your needs and then choose the best code editor for you to start your coding journey.