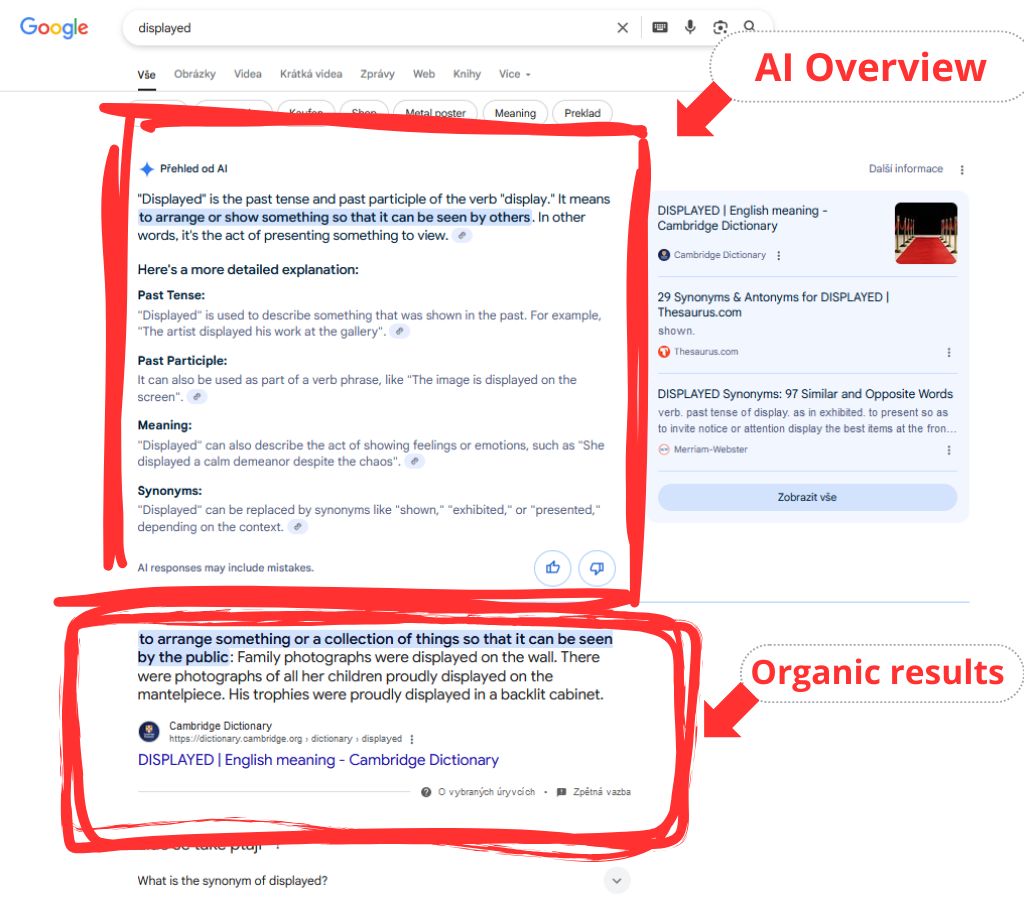

AI Overviews are short, AI-written summaries that now appear at the top of some Google search results. Instead of only showing links to websites, Google uses artificial intelligence to generate a quick answer to the user’s question. The feature grew out of Google’s “Search Generative Experience” (SGE), an experiment introduced in Search Labs in 2023, and launched as AI Overviews in the US in May 2024 before expanding to more countries. The goal is to help users find answers faster, without needing to visit multiple websites.

These summaries are not taken directly from one source. Instead, Google uses its large language models (like Gemini) to scan multiple trusted web pages and generate a new, human-like answer. While some source links may be shown below the summary, the main text is AI-generated, not copy-pasted.

How Do AI Overviews Work?

When you type a question into Google, the system does three things. First, it interprets your intent – what you’re really trying to find. Second, it scans Google’s index to find high-quality content from reputable websites. Third, it generates a fresh answer using that content, written in natural language. This process is powered by models trained on billions of pages, which allow it to “understand” and paraphrase content in real time.

The answer is then shown at the very top of the page, even above the traditional “blue links.” This is where AI Overviews differ from older features like featured snippets. Snippets usually quote a single source, while AI Overviews synthesize information from many sources and sometimes cite none of them clearly. This makes the summaries feel more fluid but also makes them harder for publishers to track or benefit from.

Why AI Overviews Could Reduce Your Website Traffic

For users, this is a faster way to get information. But for website owners, it means fewer clicks. If the AI answer satisfies the user’s need, they won’t scroll down or click on any search results – even if your website ranks #1. That’s a major shift in how Google Search works.

Websites that provide educational, explanatory, or how-to content are especially at risk. These are the types of pages that AI Overviews summarize most often. Even if your site helped train or inform the answer, users might never visit your page. That means fewer impressions, fewer clicks, and possibly lower ad revenue or conversions.

Early testing by industry tools like Semrush and Similarweb has already shown declines in organic traffic to affected sites. Some publishers report that AI Overviews may reduce visibility for even well-ranking content, especially on mobile.

What It Means for SEO Strategy

The old rules of SEO focused on ranking as high as possible. But with AI Overviews, even the top result may be pushed below the fold. That means you now need to optimize for visibility within the AI-generated content – not just on the results page.

To do that, your content must be factual, clearly structured, and written with expertise. Google has said that it values sources with strong E-E-A-T: Experience, Expertise, Authoritativeness, and Trustworthiness. Structured content with proper headings, answers to common questions, and up-to-date information is more likely to be used as a source by the AI.

It’s also important to focus on branded visibility. If users see your website linked under the AI summary, it still builds trust and awareness, even if they don’t click right away. Monitoring these references is becoming a new form of SEO tracking.

Is What Google’s Doing Even Legal?

This question is becoming more urgent. Google argues that its use of web content in AI Overviews falls under the principle of “fair use,” which allows limited portions of copyrighted material to be reused without permission, for example as search snippets. This is the same legal reasoning search engines have used for years.

However, critics argue that AI-generated summaries are fundamentally different. Instead of quoting, they rephrase and recreate content – often without meaningful attribution. That, they say, goes beyond fair use and threatens publishers’ ability to monetize their work.

In the United States, no major court case has yet ruled specifically on this type of AI usage. But in the European Union, regulators are already examining whether this practice could violate copyright law or distort competition. The outcome of those investigations could shape global policy.

What Should You Do Now?

If your website depends on Google Search for traffic, it’s time to take this shift seriously. AI Overviews are not a small feature – they fundamentally change the way users interact with search results. For many website owners, especially those producing informational or how-to content, this could mean a noticeable drop in organic traffic.

Early studies and industry data suggest that when Google displays an AI Overview, fewer users click through to traditional results – even to the top-ranking pages. The more complete and useful the AI-generated answer is, the less likely users are to scroll down. In some cases, AI Overviews may replace featured snippets, while in others, they appear alongside them, pushing even high-ranking links further down the page. This means your page may still rank well, but earn fewer clicks than before.

This drop will not affect every website equally. Sites focused on navigational, transactional, or branded queries (like product searches, pricing, or direct services) may see less of a hit. But publishers, bloggers, affiliate marketers, and companies that rely on educational or evergreen content are especially exposed. Their content is exactly the type Google’s AI uses to create its answers, often without a click being needed.

So, what can be done to reduce the impact?

Google’s AI Overviews are already changing how users interact with search results – and that has measurable consequences for websites. The core reason why this matters is simple: AI Overviews often satisfy the user’s intent without requiring a single click. That breaks the traditional SEO model, where creating high-quality content and ranking high brought consistent, measurable traffic.

This change means that even if your page ranks in the top 3, you may get significantly fewer visitors than before – because the user got what they needed from the AI-generated summary at the top of the page.

Early data shows that traffic drops are real. In May 2024, both Semrush and Similarweb began publishing reports showing 15–40% click loss for informational queries with AI Overviews present. In sectors like health, science, finance, and tech, these numbers are closer to 45–60%, particularly when Google does not show a clear link to the source. Publishers like The Verge, TechCrunch, and Healthline have publicly stated that traffic from Google has become less predictable since the AI Overview rollout began in the US.
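To see what a loss range like 15–40% could mean for a specific site, the sketch below applies it as a relative reduction to a click count. It is a minimal Python example under stated assumptions: the 5,000 monthly clicks are hypothetical, they should come only from queries where you have actually seen an AI Overview, and the function name projected_clicks is made up for illustration.

```python
from typing import Tuple


def projected_clicks(current_clicks: float, loss_low: float, loss_high: float) -> Tuple[float, float]:
    """Return (worst case, best case) clicks after a relative click loss.

    Losses are fractions (0.15 means 15%) and are applied as relative
    reductions of the click count, not as percentage-point drops in CTR.
    """
    if not 0 <= loss_low <= loss_high <= 1:
        raise ValueError("expected 0 <= loss_low <= loss_high <= 1")
    return current_clicks * (1 - loss_high), current_clicks * (1 - loss_low)


if __name__ == "__main__":
    # Hypothetical: 5,000 monthly clicks from queries that show an AI Overview.
    worst, best = projected_clicks(5_000, 0.15, 0.40)
    print(f"{worst:,.0f} to {best:,.0f} clicks per month")
```

With the hypothetical 5,000 clicks, the range works out to roughly 3,000 to 4,250 clicks per month. Treat the result as a planning range rather than a forecast: the percentages come from aggregate industry reports, and your own queries may behave differently.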

Even Google’s own demo queries in public tests showed reduced visibility for organic results – in some examples, the AI summary pushed all “blue links” below the fold, especially on mobile.

So who will be hit the hardest?

- Sites with content that answers common questions (e.g. “What is compound interest?”)

- Niche publishers and blogs that rely on evergreen traffic

- Affiliate content and comparison posts that Google now summarizes into a paragraph

- Service businesses that rank for “how to” or explainer content tied to their field

Advanced Tactics to Defend SEO and Monetization in the Age of Google AI Overviews

While many standard SEO tips fall short in the face of AI Overviews, there are still advanced strategies you can apply to protect visibility, defend revenue, and future-proof your content. These are not quick fixes – they require effort, structure, and precision – but they give you a real edge in a search landscape where Google is rewriting the rules. Below are specific, actionable techniques that go beyond surface-level advice.

- Audit your most exposed content – manually check where AI Overviews are replacing your traffic. Export your top-performing URLs from Google Search Console based on clicks or impressions. Search each page’s primary keyword in an incognito browser. If an AI Overview (AIO) appears, flag that page as high risk. Create a list and mark which pages need rewriting, restructuring, or support content. This lets you focus your effort where the threat is measurable.

- Target AI-resistant query types – prioritize content that cannot be easily summarized. Avoid building new content around simple factual queries. Focus instead on content that requires human input or context, such as personal comparisons, local reviews, product testing, controversial takes, or emerging legal and industry changes. These are harder for Google to summarize without misunderstanding or oversimplifying.

- Create page elements AI can’t scrape – make parts of your value interactive or protected. Add components that Google cannot extract cleanly into summaries: comparison tables with expandable sections, dynamic filters, interactive tools, gated downloads, and exclusive media. For affiliate content, include data tables that require a scroll or interaction to access the conclusion. This forces the user to visit your site for the full value.

- Create attribution-demanding content – add insights AI cannot legally or ethically claim. Publish original data, quotes from experts, customer surveys, proprietary charts, screenshots from tests, or any type of information that clearly originates from your business. This makes it harder for AI to reuse it without attribution and increases the chance Google will cite you directly. Consider watermarking visuals or using in-content attributions like “Based on 2,100 customer reviews we collected between January–April 2024.”

- Restructure existing articles – keep the answer, but defend the click. Give a short summary or direct answer at the top, but keep details, analysis, and recommendations further down the page. Use clear subheadings to signal depth and separate information into parts AI can’t easily compress. Add expert quotes, unique case studies, or supporting context that distinguishes your content from generic sources.

- Cluster related content – build topic depth to increase source authority. Instead of creating single isolated pages, develop multiple pages around a core topic and interlink them. This helps Google’s systems associate your domain with topical authority. For example, rather than one article on “protein powder,” create a structured cluster: comparisons, how to use it, side effects, types, and expert reviews. Internal linking boosts crawlability and signals depth, making you more likely to be used as a trusted source in Overviews.

- Track branded impressions and citations – monitor your presence inside AI Overviews. Start tracking when your site or brand appears underneath AI Overviews, even if it doesn’t lead to a click. Filter the Search Console performance report to branded queries and observe whether impressions grow while CTR falls. Treat visibility inside Overviews as a new SEO KPI alongside rankings and clicks.

- Fortify revenue per visitor – don’t rely on raw traffic to monetize. If your model depends on ad impressions, diversify into formats that reward engagement instead of volume. Focus on email capture, product lead forms, affiliate conversions, or memberships. Test placing calls to action earlier in your content so that even partial scrolls can convert. Identify your top 10 revenue-driving pages and A/B test new monetization layouts optimized for lower traffic but higher conversion.

- Add author identity and credentials – strengthen your E-E-A-T signals. Google’s AI Overviews are more likely to rely on sites that demonstrate experience and authority. Show the real name, photo, and expertise of your author, ideally with links to their credentials or professional profiles. Use schema.org Person markup for article authors where relevant. For YMYL (Your Money or Your Life) topics like health, finance, or legal, this can be essential to being referenced as a trusted source.
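For the first tactic in the list above (“Audit your most exposed content”), the audit can be kept in a simple worksheet. The sketch below is a minimal Python example, assuming a CSV export of the Search Console Pages report with “Top pages”, “Clicks” and “Impressions” columns (check the headers in your own export). The AI Overview check itself stays manual; the example URLs and the helper names load_top_pages and flag_high_risk are hypothetical.

```python
import csv
import io
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class AuditRow:
    page: str
    clicks: int
    impressions: int
    # Filled in by hand after searching the page's primary keyword in an incognito window.
    aio_present: Optional[bool] = None


def load_top_pages(csv_text: str, top_n: int = 50, sort_by: str = "clicks") -> List[AuditRow]:
    """Parse a Search Console pages export and keep the top_n pages.

    Assumes 'Top pages', 'Clicks' and 'Impressions' columns;
    sort_by is either "clicks" or "impressions".
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = [
        AuditRow(
            page=r["Top pages"],
            clicks=int(r["Clicks"].replace(",", "")),
            impressions=int(r["Impressions"].replace(",", "")),
        )
        for r in reader
    ]
    rows.sort(key=lambda row: getattr(row, sort_by), reverse=True)
    return rows[:top_n]


def flag_high_risk(rows: List[AuditRow], aio_observed: Dict[str, bool]) -> List[str]:
    """Record the manual AIO checks and return the pages flagged as high risk."""
    for row in rows:
        if row.page in aio_observed:
            row.aio_present = aio_observed[row.page]
    return [row.page for row in rows if row.aio_present]


if __name__ == "__main__":
    export = (
        "Top pages,Clicks,Impressions,CTR,Position\n"
        "https://example.com/compound-interest,1200,40000,3%,2.1\n"
        "https://example.com/pricing,300,2000,15%,1.4\n"
        "https://example.com/how-to-budget,900,35000,2.57%,3.0\n"
    )
    top = load_top_pages(export, top_n=2)
    observed = {
        "https://example.com/compound-interest": True,  # AI Overview seen in incognito
        "https://example.com/how-to-budget": False,
    }
    print(flag_high_risk(top, observed))  # ['https://example.com/compound-interest']
```

Keeping the manual observations in a dictionary means the list can be re-run whenever you re-check a keyword, since AI Overviews appear and disappear over time.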
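For the “Cluster related content” tactic in the list above, a quick way to check a hub-and-spoke cluster is to list the internal links it is missing. This is a minimal Python sketch under an assumed rule (the hub links to every spoke and every spoke links back to the hub); the protein powder URLs and the function name missing_cluster_links are hypothetical, and the link map would in practice come from your own crawl data.

```python
from typing import Dict, List, Set, Tuple


def missing_cluster_links(
    hub: str, spokes: List[str], links: Dict[str, Set[str]]
) -> List[Tuple[str, str]]:
    """Return the (source, target) internal links a hub-and-spoke cluster lacks.

    `links` maps each URL to the set of internal URLs it links to. The rule
    checked here is an assumption: the hub should link to every spoke, and
    every spoke should link back to the hub.
    """
    missing: List[Tuple[str, str]] = []
    hub_links = links.get(hub, set())
    for spoke in spokes:
        if spoke not in hub_links:
            missing.append((hub, spoke))
        if hub not in links.get(spoke, set()):
            missing.append((spoke, hub))
    return missing


if __name__ == "__main__":
    hub = "/protein-powder/"
    spokes = [
        "/protein-powder/comparison/",
        "/protein-powder/how-to-use/",
        "/protein-powder/side-effects/",
    ]
    links = {
        hub: {"/protein-powder/comparison/", "/protein-powder/how-to-use/"},
        "/protein-powder/comparison/": {hub},
        "/protein-powder/how-to-use/": set(),
        "/protein-powder/side-effects/": {hub},
    }
    for source, target in missing_cluster_links(hub, spokes, links):
        print(f"add a link from {source} to {target}")
```

Spoke-to-spoke links are deliberately not required here; whether related spokes should also link to each other is an editorial choice.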
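For the “Track branded impressions and citations” tactic in the list above, the signal to watch is branded impressions rising while branded CTR falls. The sketch below is a minimal Python example, assuming per-query rows of (query, clicks, impressions) exported from Search Console for two comparable periods, and assuming branded queries can be identified by a simple case-insensitive substring match on brand terms. The brand “Acme” and the helper names are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple

# One row per query from a Search Console performance export: (query, clicks, impressions).
QueryRow = Tuple[str, int, int]


@dataclass
class PeriodStats:
    clicks: int
    impressions: int

    @property
    def ctr(self) -> float:
        return self.clicks / self.impressions if self.impressions else 0.0


def branded_totals(rows: Iterable[QueryRow], brand_terms: List[str]) -> PeriodStats:
    """Sum clicks and impressions for queries containing any brand term (case-insensitive)."""
    terms = [t.lower() for t in brand_terms]
    clicks = impressions = 0
    for query, c, i in rows:
        if any(t in query.lower() for t in terms):
            clicks += c
            impressions += i
    return PeriodStats(clicks, impressions)


def visible_but_not_clicked(before: PeriodStats, after: PeriodStats) -> bool:
    """True when branded impressions grew while branded CTR fell."""
    return after.impressions > before.impressions and after.ctr < before.ctr


if __name__ == "__main__":
    before = branded_totals([("acme budget app", 100, 1000), ("budget tips", 50, 5000)], ["Acme"])
    after = branded_totals(
        [("acme budget app", 90, 1500), ("acme review", 10, 500), ("budget tips", 40, 6000)],
        ["Acme"],
    )
    print(f"CTR {before.ctr:.1%} -> {after.ctr:.1%}, flag: {visible_but_not_clicked(before, after)}")
```

Because this works on aggregate numbers, a positive flag only hints at AI Overview exposure; confirm it by checking the queries themselves.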
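For the “Fortify revenue per visitor” tactic in the list above, two numbers are useful when A/B testing monetization layouts: revenue per visitor (RPV) for each variant, and the conversion rate you would need to keep the same number of conversions after traffic drops. This is a minimal Python sketch; the Variant class, the helper names and all figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Variant:
    visitors: int
    conversions: int
    revenue: float  # total revenue attributed to this layout during the test

    @property
    def conversion_rate(self) -> float:
        return self.conversions / self.visitors if self.visitors else 0.0

    @property
    def revenue_per_visitor(self) -> float:
        return self.revenue / self.visitors if self.visitors else 0.0


def rpv_lift(control: Variant, test: Variant) -> float:
    """Relative change in revenue per visitor of the test layout versus the control."""
    return (test.revenue_per_visitor - control.revenue_per_visitor) / control.revenue_per_visitor


def break_even_conversion_rate(old_visitors: int, old_rate: float, new_visitors: int) -> float:
    """Conversion rate needed to keep the same number of conversions with fewer visitors."""
    return old_visitors * old_rate / new_visitors


if __name__ == "__main__":
    control = Variant(visitors=5000, conversions=50, revenue=2000.0)
    test = Variant(visitors=5000, conversions=70, revenue=2800.0)
    print(f"RPV lift: {rpv_lift(control, test):.0%}")
    # 10,000 visitors at 2% = 200 conversions; with 7,000 visitors that needs about 2.86%.
    print(f"break-even rate: {break_even_conversion_rate(10_000, 0.02, 7_000):.2%}")
```

This only compares point estimates; a real A/B test should also check that the difference between variants is statistically significant before you switch layouts.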
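For the “Add author identity and credentials” tactic above, author markup is usually added as a JSON-LD script in the page head. The sketch below generates one with Python’s json module, using the schema.org Article and Person types and the name, jobTitle, url, image and sameAs properties. The author details are hypothetical, the helper name article_author_jsonld is made up, and valid markup does not by itself guarantee that Google will cite the page.

```python
import json
from typing import List


def article_author_jsonld(
    headline: str,
    author_name: str,
    job_title: str,
    author_url: str,
    image_url: str,
    same_as: List[str],
) -> str:
    """Build a JSON-LD <script> tag describing an Article and its Person author (schema.org)."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {
            "@type": "Person",
            "name": author_name,
            "jobTitle": job_title,
            "url": author_url,  # author bio page on your own site
            "image": image_url,
            "sameAs": same_as,  # external profiles that confirm the author's identity
        },
    }
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"


if __name__ == "__main__":
    print(
        article_author_jsonld(
            headline="How Compound Interest Works",
            author_name="Jane Doe",
            job_title="Certified Financial Planner",
            author_url="https://example.com/authors/jane-doe",
            image_url="https://example.com/img/jane-doe.jpg",
            same_as=["https://www.linkedin.com/in/jane-doe-example"],
        )
    )
```

Generating the markup from the same author record that renders the visible byline helps keep the two consistent.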

Finally, don’t assume that AI Overviews will show for every query. Google currently displays them mainly for complex or multi-step questions, not for every search. That may change over time, or evolve based on user feedback, regulations, and publisher pushback.

In short, prepare for lower CTR (click-through rate) on some content, but don’t panic. Your role as a content creator isn’t disappearing – it’s just shifting. To stay visible in this new search environment, you’ll need to focus on trust, usability, and precision more than ever before. Being a source for Google’s AI, even without a click, could become the next form of SEO visibility.

Other Sources & Industry Insights for AI Overviews (AIO)

Here you can find other sources related to AI Overviews, including early-stage reports, live examples, and industry commentary. These materials reflect how the feature is being tested, how it behaves in the current search environment, and how publishers and SEO professionals are beginning to respond.

Rich Sanger SEO – AI Overviews Research: Articles, Studies, and Insights

- Link: https://richsanger.com/google-ai-overviews-research/

- A curated list of links to articles, analyses, and real-world examples related to Google AI Overviews. Most of the sources are from 2024, when Google began rolling out the feature, and they document its behavior, initial impact, and reactions from the SEO and publishing communities.

Barry Schwartz – Google AI Overviews Expand Again & Lead To 10% Query Growth (2025-05-21)

- Link: https://www.seroundtable.com/google-ai-overviews-expand-39445.html

- Reports on Google’s announcement at I/O 2025 that AI Overviews are now available in over 200 countries and territories, supporting more than 40 languages, including Arabic, Chinese, Malay, and Urdu. Notably, Google stated that AI Overviews have led to a 10% increase in the usage of Google for queries that display these summaries. Despite this growth, Google has not provided data on how AI Overviews affect click-through rates to websites, and when questioned, declined to comment on this aspect. Additionally, the article notes that AI Overviews are now powered by a custom version of Google’s Gemini 2.5 model, enhancing the system’s ability to handle more complex queries.

Ahrefs – Insights From 55.8M AI Overviews Across 590M Searches (2025-05-19)

- Link: https://ahrefs.com/blog/insights-from-56-million-ai-overviews/

- The study analyzed 55.8 million AI Overviews (AIOs) across 590 million search queries, revealing that AIOs now appear in approximately 9.46% of desktop keywords and account for over 54.6% of total search impressions. The research highlights that AIOs predominantly surface for informational queries, particularly long-tail and high-volume searches, while being less common for branded or local queries. Notably, the top 50 domains, including Wikipedia, Reddit, and Quora, comprise 28.9% of all AIO mentions, indicating a concentration of visibility among content-rich platforms. The study also confirms a significant impact on user behavior, with AIOs reducing click-through rates by an average of 34.5%, underscoring the need for content creators to adapt.

Glen Allsopp – The State of SEO in 2025 (2025-05-15)

- Link: https://detailed.com/state-of-seo/

- Examines how SEO is being reshaped by AI-driven search interfaces, particularly Google’s AI Mode. Allsopp highlights the rise of new optimization models like Answer Engine Optimization (AEO) and Generative Engine Optimization (GEO), emphasizing that traditional keyword-based strategies are no longer sufficient. The article stresses the need for structured, expert-driven content that aligns with how AI summarizes and ranks results.

Search Engine Journal – Google Links To Itself: 43% Of AI Overviews Point Back To Google (2025-05-14)

- Link: https://www.searchenginejournal.com/google-links-to-itself-43-of-ai-overviews-point-back-to-google/546574/

- 43% of Google’s AI Overviews contain links that redirect users back to Google’s own search results, rather than to external sources. This practice contributes to a “walled garden” effect, where users are kept within Google’s ecosystem. Additionally, the study notes that longer and low-volume search queries are more likely to trigger AI Overviews, which appear in approximately 30% of all searches. The findings raise concerns about the implications for publishers and the broader web ecosystem, as Google’s AI-generated answers increasingly reference its own content.

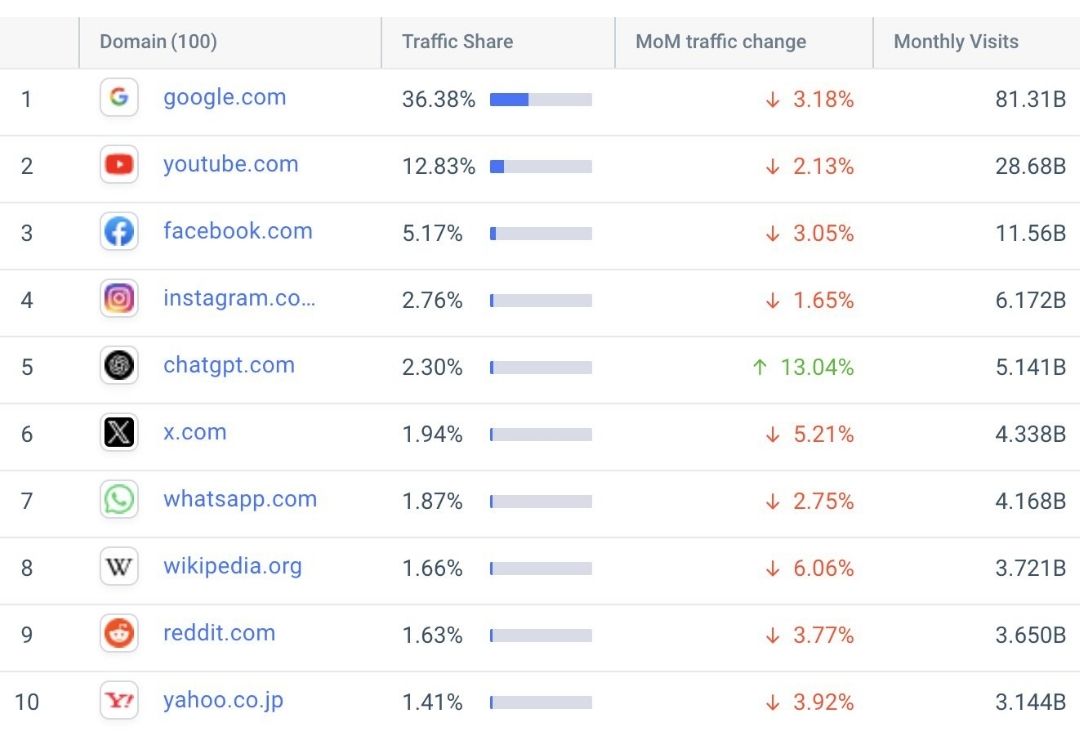

Similarweb – The top 10 websites in the world (total monthly visits), April 2025 (2025-05-09)

- Link: https://x.com/Similarweb/status/1920725435979034861/photo/1

- Highlights the most visited websites globally in April 2025. The list includes major platforms such as Google, YouTube, Facebook, Instagram, and ChatGPT, reflecting their significant user engagement and dominance in web traffic. Notably, ChatGPT has entered the top five for the first time (moved up by 14 positions), surpassing X (formerly Twitter), indicating a shift in user preferences and the growing influence of AI-driven platforms.

iloveseo.net – Why AI Mode will replace traditional search as Google’s default interface (2025-05-08)

- Link: https://www.iloveseo.net/why-ai-mode-will-replace-traditional-search-as-googles-default-interface/

- Gianluca Fiorelli argues that Google’s AI Mode is poised to become the default search interface, moving beyond traditional search results to a conversational, personalized, AI-powered experience. He highlights AI Mode’s integration of features like Gemini’s memory and contextualization, enabling persistent knowledge about users to shape responses. The article discusses the implications for websites, businesses, and SEO, emphasizing the need for structured, annotated content and a focus on being cited in AI-generated responses. Fiorelli also notes the potential risks of hyper-personalization, such as the creation of “search echo chambers,” and the challenges in measuring performance within AI Mode due to the lack of traditional metrics like impressions and clicks.

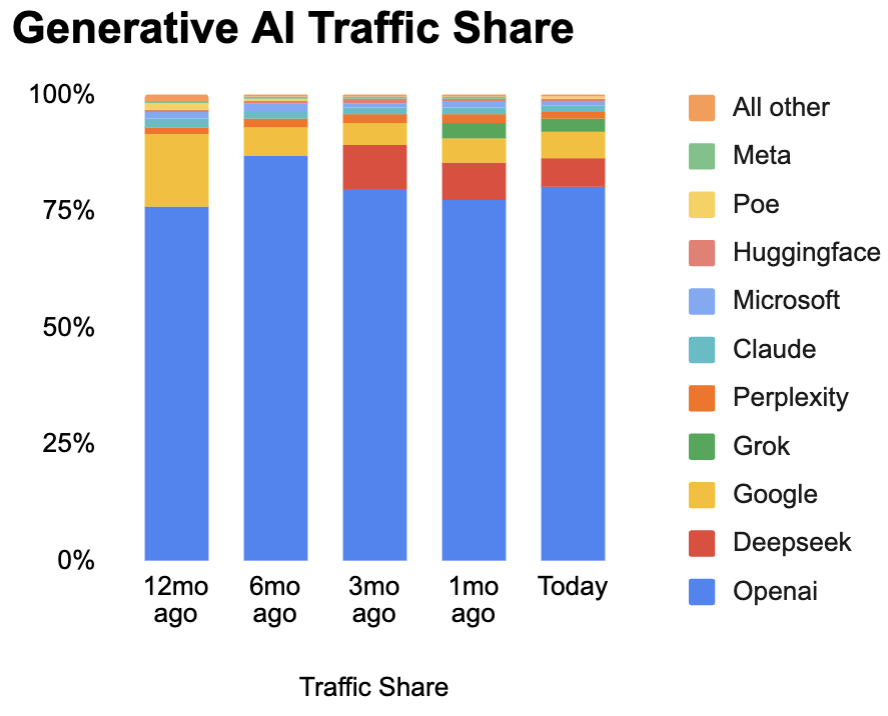

Similarweb – GenAI Traffic Share Update (2025-05-07)

- Link: https://x.com/Similarweb/status/1920026287625658628

- According to Similarweb’s latest data, as of early May 2025, ChatGPT maintains a dominant position in the AI tool traffic landscape, commanding 80.1% of global visits. Results:

- ChatGPT: 80.1%

- DeepSeek: 6.5%

- Google: 5.6%

- Grok: 2.6%

- Perplexity: 1.5%

Semrush AI Overviews Study: What 2025 SEO Data Tells Us About Google’s Search Shift (2025-05-05)

- Link: https://www.semrush.com/blog/semrush-ai-overviews-study/

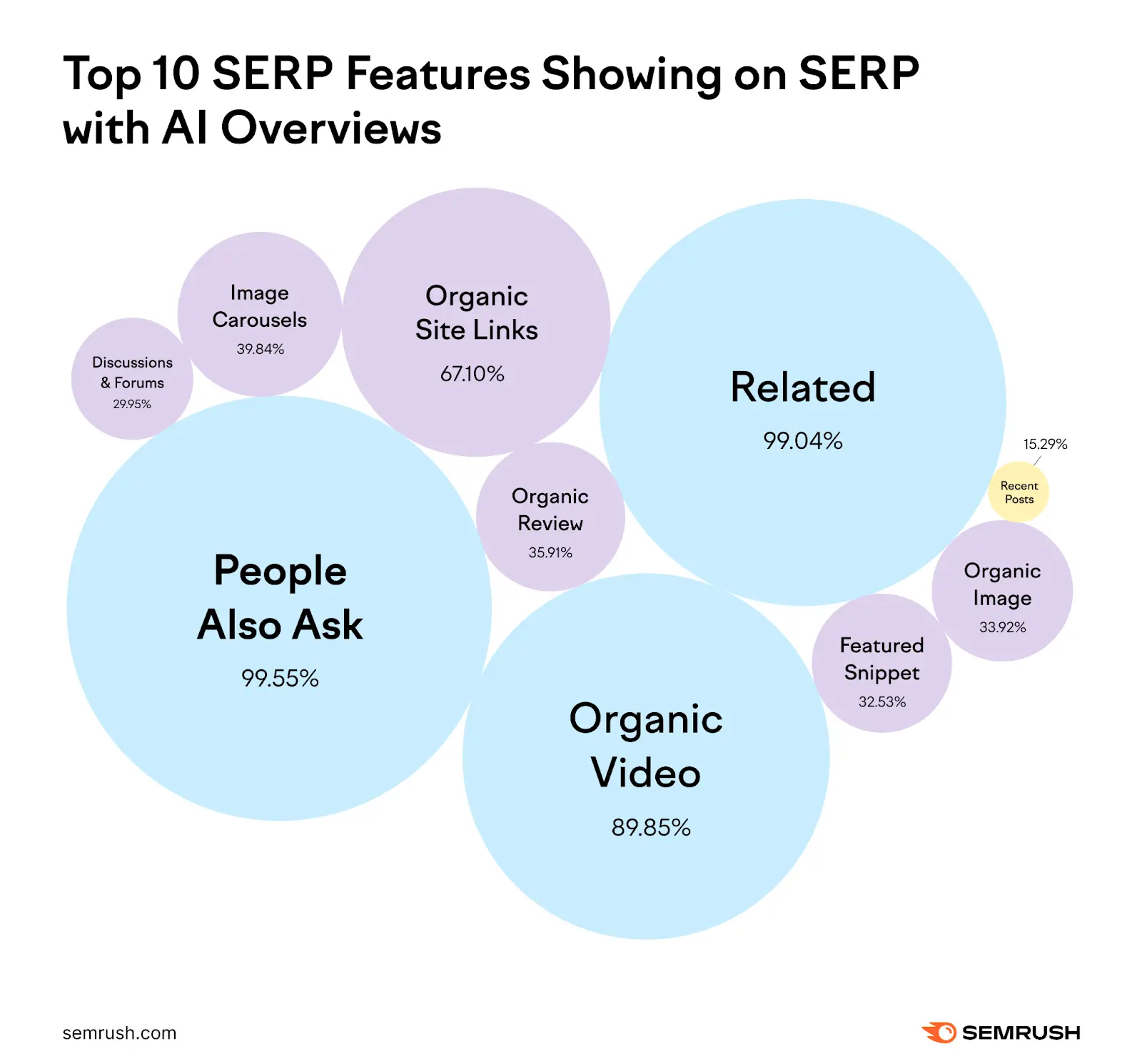

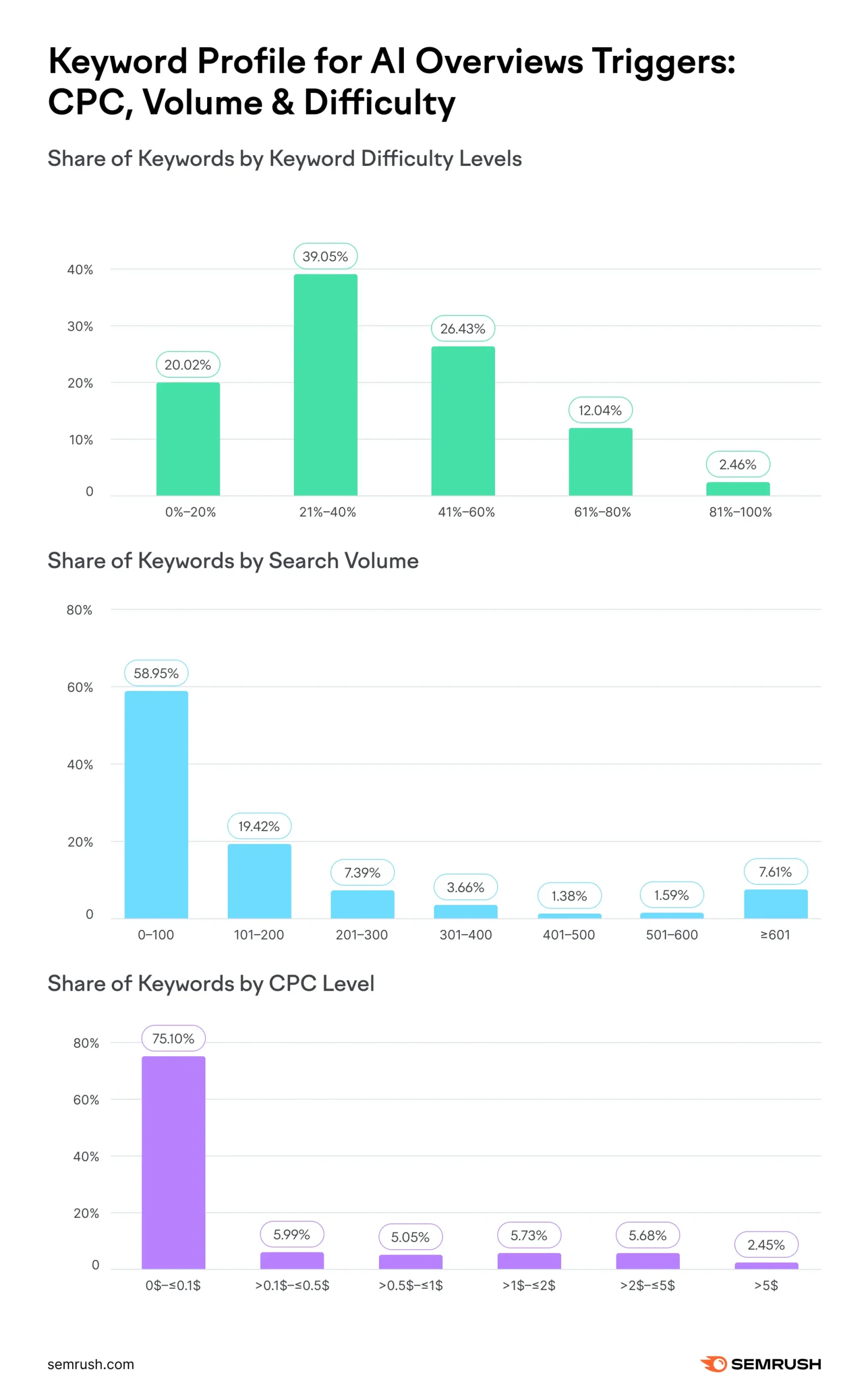

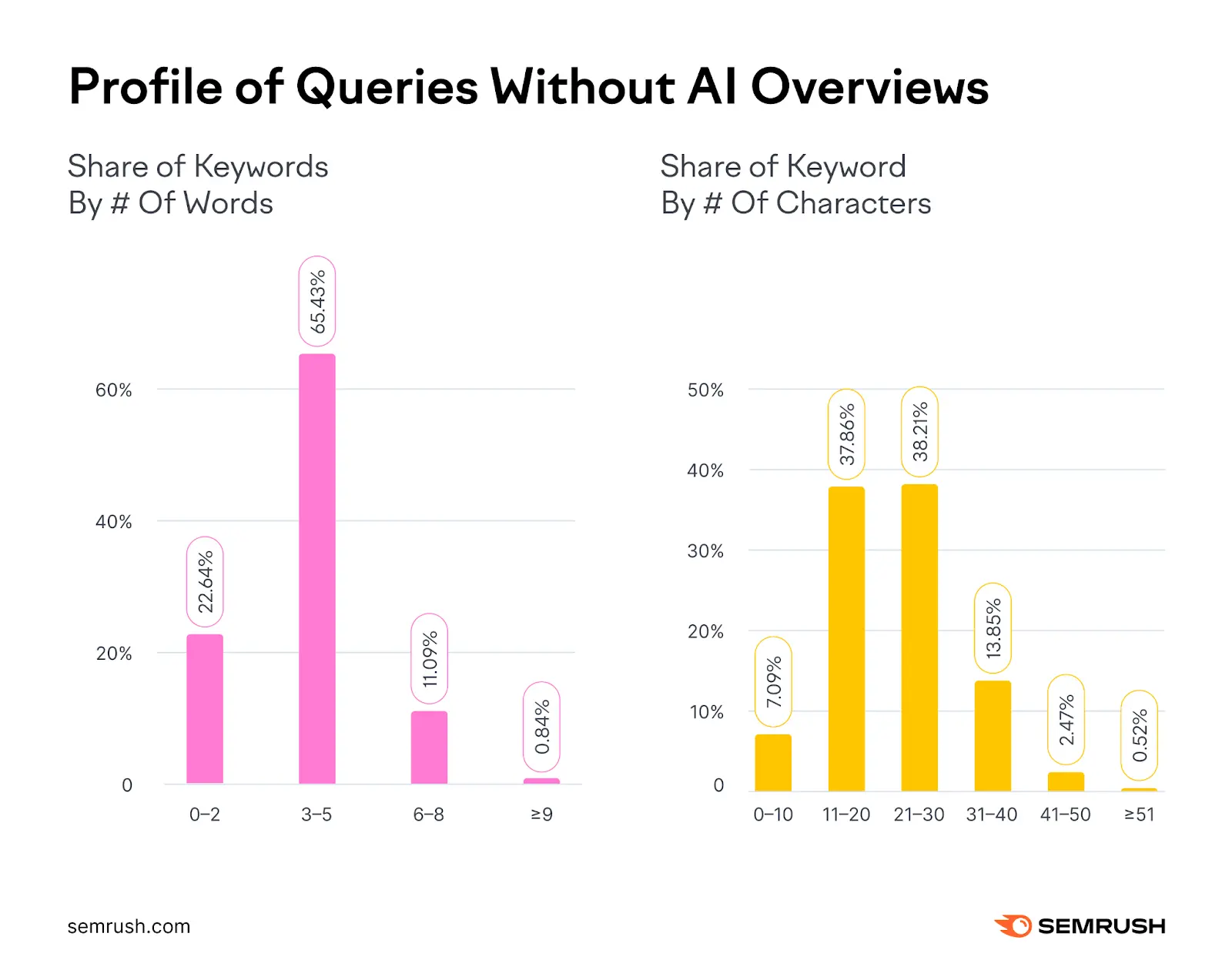

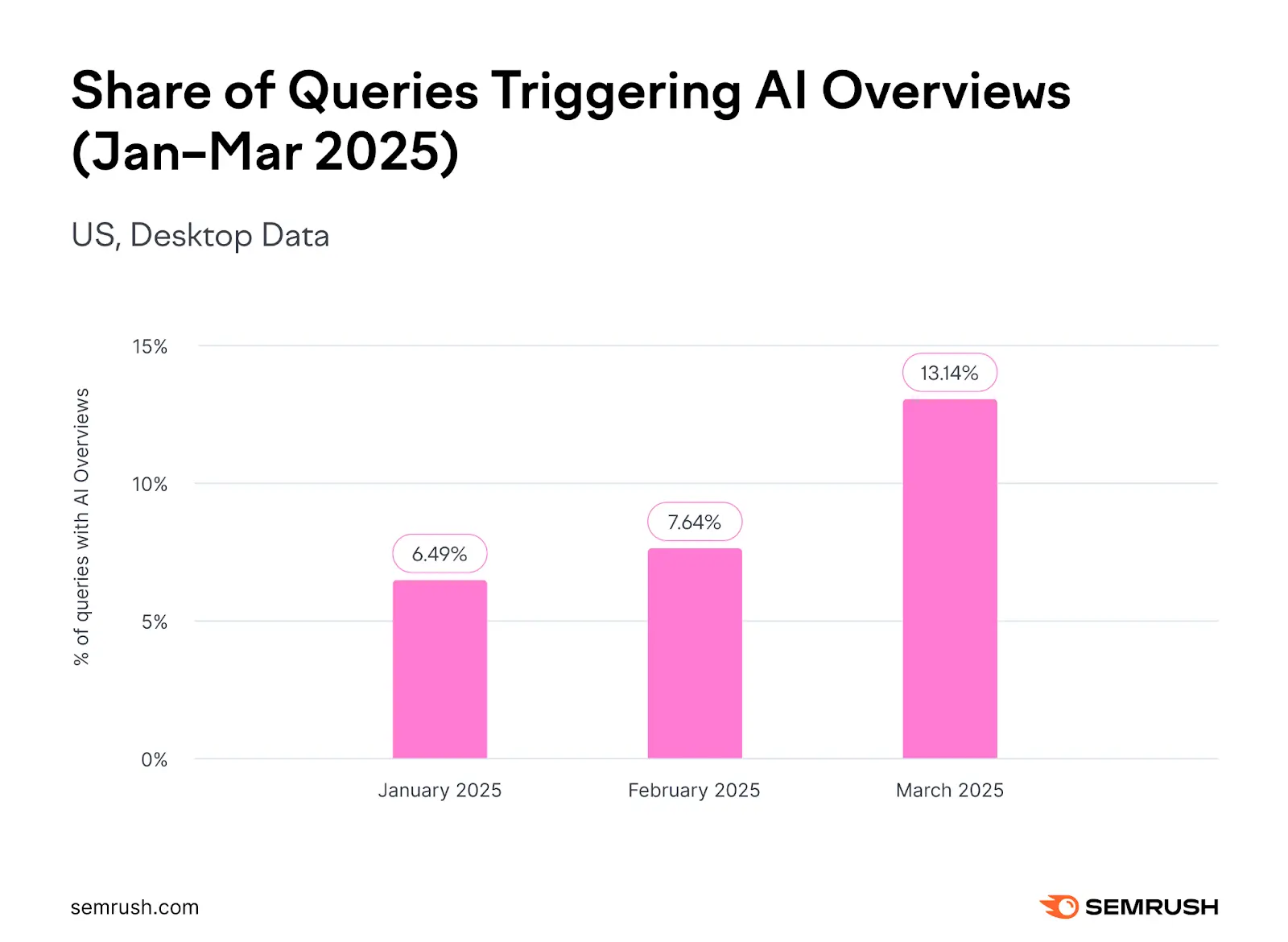

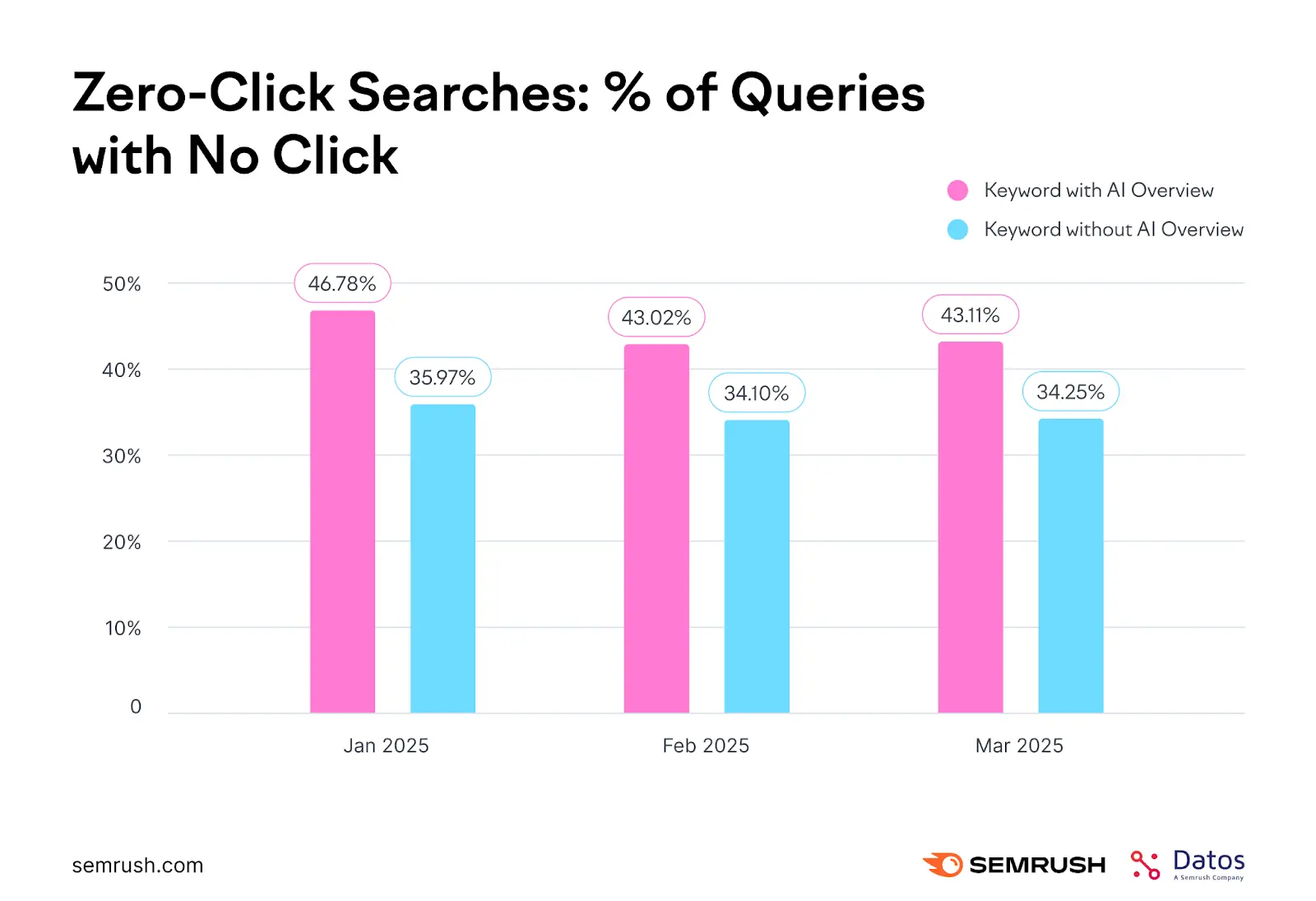

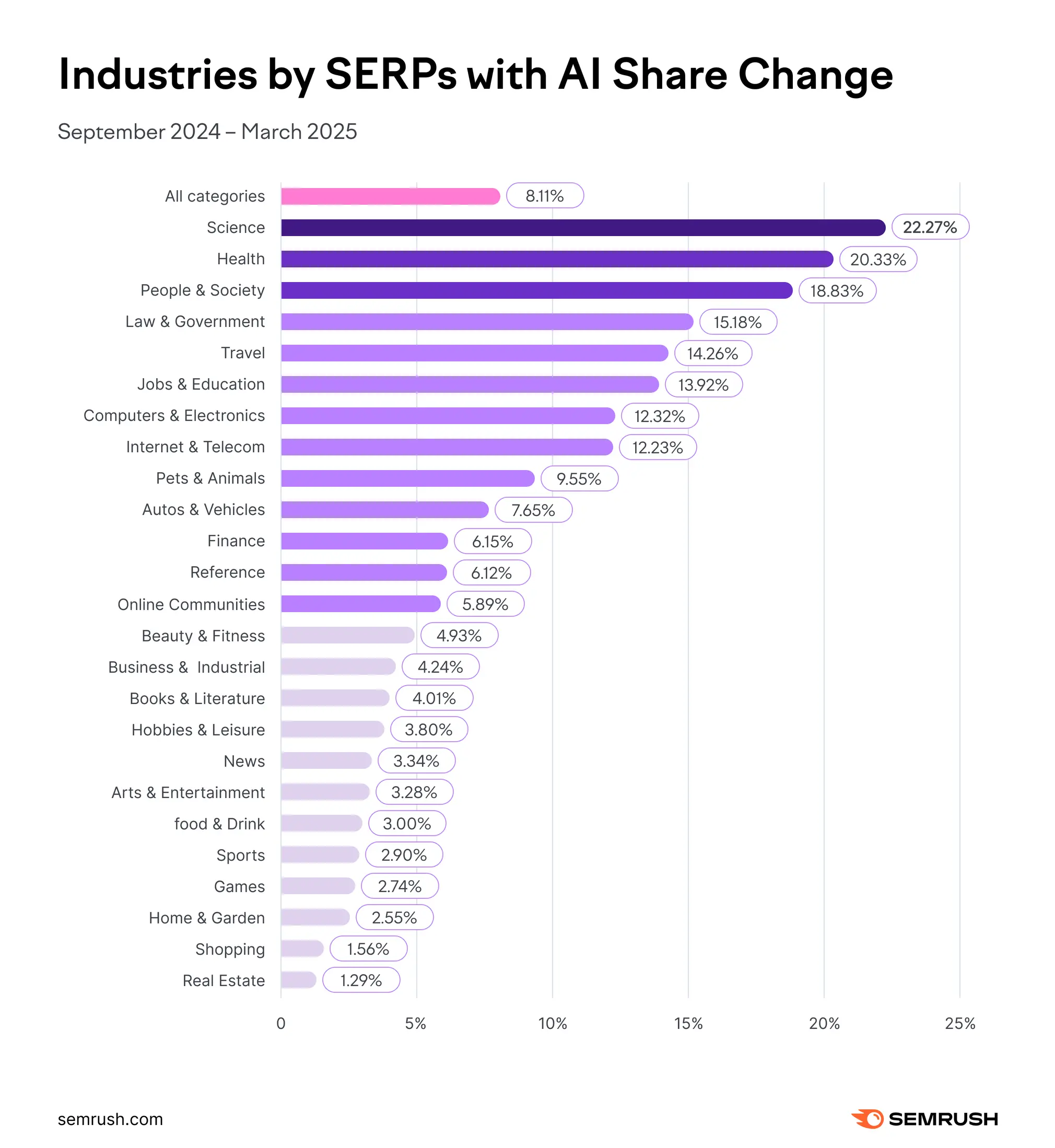

- Probably the best in-depth analysis of AI Overviews available right now. Semrush’s large-scale study of over 10 million keywords provides a clear quantitative view of where and how AI Overviews appear in search. The share of queries triggering AI Overviews grew from 6.49% in January 2025 to 13.14% in March 2025. A breakdown by query intent revealed that 88.1% of all AI Overviews appeared on informational searches, while navigational and commercial queries were much less affected. Crucially, the study includes a category-level visibility breakdown, identifying Science, Health, and Society as by far the most impacted content areas. The report also compares zero-click behavior before and after the introduction of AI Overviews and notes a slight but measurable decline in zero-click rates, possibly due to the inclusion of more visible source links. Overall, the findings offer data-backed insight into how AI Overviews are shifting visibility and click patterns across search.

Bloomberg – Google Can Train Search AI With Web Content After AI Opt-Out (2025-05-03)

- Link: https://www.bloomberg.com/news/articles/2025-05-03/google-can-train-search-ai-with-web-content-even-after-opt-out

- Google now claims it can continue using website content to improve AI-powered Search features like AI Overviews, even if a site explicitly opts out of AI training via robots.txt (the Google-Extended token). The company draws a line between “AI training” and “product improvement,” allowing it to sidestep what many see as a clear signal from publishers. In practice, the opt-out does not stop Google’s Search AI from using your content. The famous Google motto: “Don’t be evil”… the Google way. It’s not the first time the company has reinterpreted its own principles. From prioritizing AMP pages to inflating Google Shopping in search results or enforcing opaque rules on YouTube monetization, the pattern is familiar: control the ecosystem first, explain later. In the early 2000s, Google sued the small company Geotags Inc. for using location-based search technology, only to roll out similar services itself shortly after :-). Later, the company used its Android dominance to force phone manufacturers into preloading its apps, leading to a €4.3 billion fine from the EU. Even its promise to respect user privacy has frayed, as it quietly replaces third-party cookies with equally invasive first-party tracking under the Privacy Sandbox. And let’s not forget the steady erosion of organic reach in favor of Google-owned surfaces, where zero-click search has become the norm, cutting publishers off from their own audiences. A more notorious example of Google abandoning its “Don’t be evil” mantra is the Terravision case, a story that encapsulates how innovation can be overshadowed by strategic dominance. The German company ART+COM pioneered early 3D mapping software, Terravision, in the 1990s, long before Google Earth existed. The company showcased its technology and shared it with Silicon Valley via a licensing deal; Google later launched Google Earth in 2005. The backstory, filled with questionable patent filings and closed-door access, led many to believe Google had simply outmaneuvered the original creators rather than innovated past them.

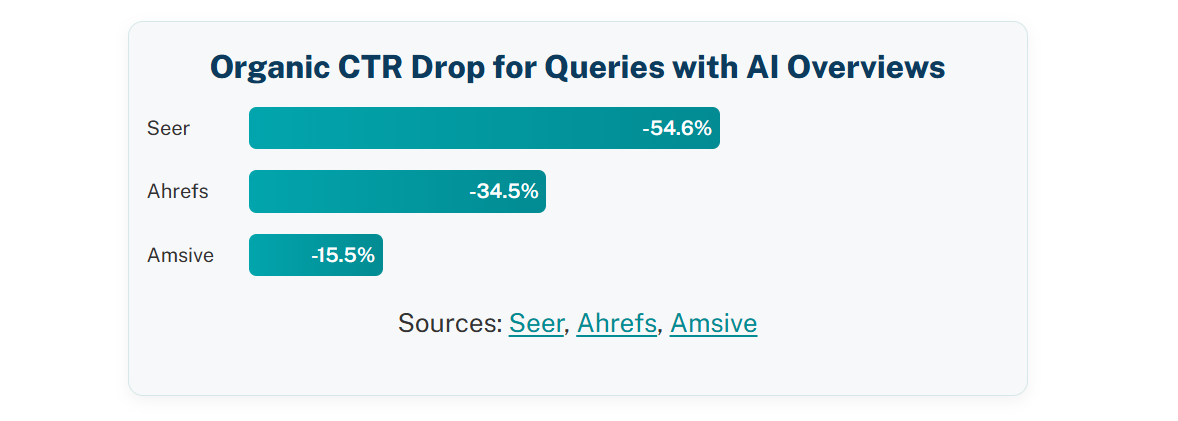

Ahrefs – AI Overviews Reduce Clicks by 34.5% (2025-04-17)

- Link: https://ahrefs.com/blog/ai-overviews-reduce-clicks/

- Presents a comprehensive analysis of how Google’s AI Overviews impact user click behavior. By examining 300,000 keywords, the study reveals that the presence of an AI Overview in search results correlates with a 34.5% decrease in the average click-through rate (CTR) for the top-ranking page compared to similar informational keywords without an AI Overview.

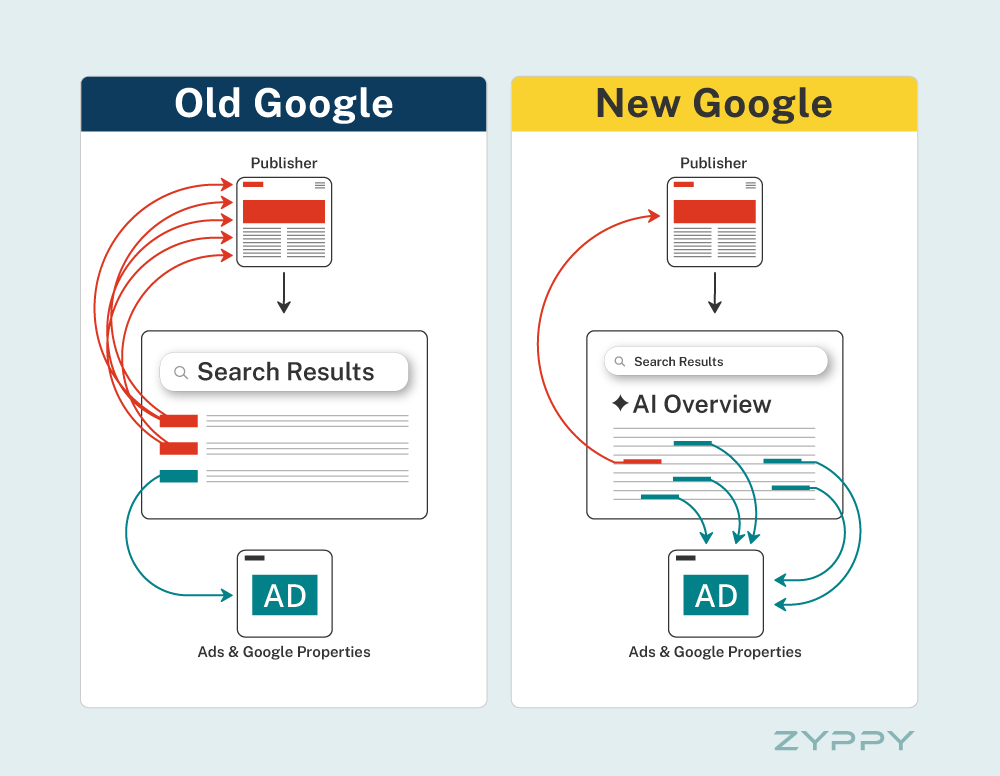

Cyrus Shepard – How AI Overviews Shift Traffic From Publishers to Google (2025-03-08)

- Link: https://zyppy.com/list/ai-overviews-to-google-ads/

- Explores how AI Overviews may divert traffic from publishers to Google itself, suggesting that they could reduce clicks to publisher websites and potentially hurt publishers’ revenue and visibility.

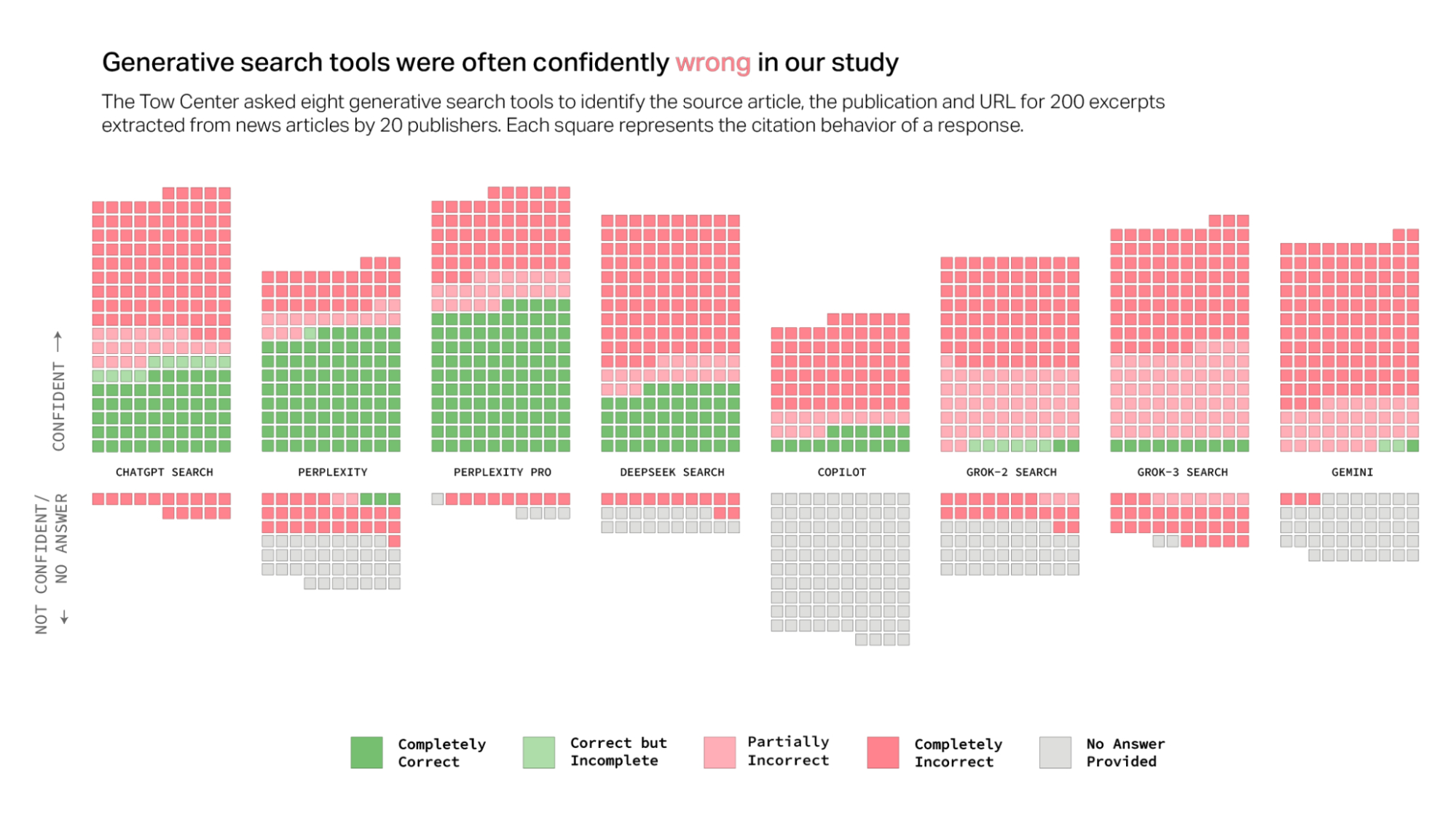

Columbia Journalism Review – AI Search Has A Citation Problem (2025-03-06)

- Link: https://www.cjr.org/tow_center/we-compared-eight-ai-search-engines-theyre-all-bad-at-citing-news.php

- This study evaluates eight AI search engines and finds that they frequently cite incorrect sources. The findings raise concerns about the reliability of AI-generated search results and their implications for news publishers. Surprisingly, the chatbots’ answers were often confidently wrong. Perplexity Pro performed best with a 63% success rate, while Gemini ranked lowest.

Google – Expanding AI Overviews and introducing AI Mode (2025-03-05)

- Link: https://blog.google/products/search/ai-mode-search/

- Google’s official announcement expanding AI Overviews and introducing AI Mode, an experimental feature in Search Labs. AI Mode aims to provide more advanced reasoning and deeper, follow-up interactions, building on the capabilities of AI Overviews.

Growth Memo – The impact of AI Overviews on SEO: analysis of 19 studies (2025-02-17)

- Link: https://www.growth-memo.com/p/the-impact-of-ai-overviews-on-seo

- A meta-analysis compiling findings from 19 studies that examine Google’s AI Overviews (AIOs) and their effects on SEO. The research indicates that AIOs significantly reduce click-through rates (CTR) for organic search results, with some studies reporting average declines of up to 8.9%. The extent of impact varies across industries, with high-intent queries experiencing smaller reductions in CTR. AIOs often occupy substantial screen space, pushing organic listings further down and diminishing their visibility.

Cressive DX – 10 Essential Insights From Our Google AI Overviews Study (2025-01-21)

- Link: https://cressive.com/key-insights-from-our-google-ai-overviews-study/

- Study analyzing 4,000 search queries (2,000 each from the US and UK) to understand the impact of Google’s AI Overviews (AIO) on search behavior. The study found that AI Overviews appeared in 47% of searches, with minimal geographic differences between the US and UK. Question-based queries were significantly more likely to trigger AIOs (~85%) compared to non-question queries (~43%). Long-tail keywords had the highest incidence of AIOs (~65%), followed by medium-tail (~49%) and short-tail (~34%) keywords. The study also revealed that 97.3% of AIOs cited at least one result from the top 10 organic listings, with positions 1–7 having a higher citation rate (~83–85%) compared to positions 8–10 (~56%). These findings suggest that maintaining a high organic ranking is crucial for influencing AI Overviews and shaping the narrative around a topic.

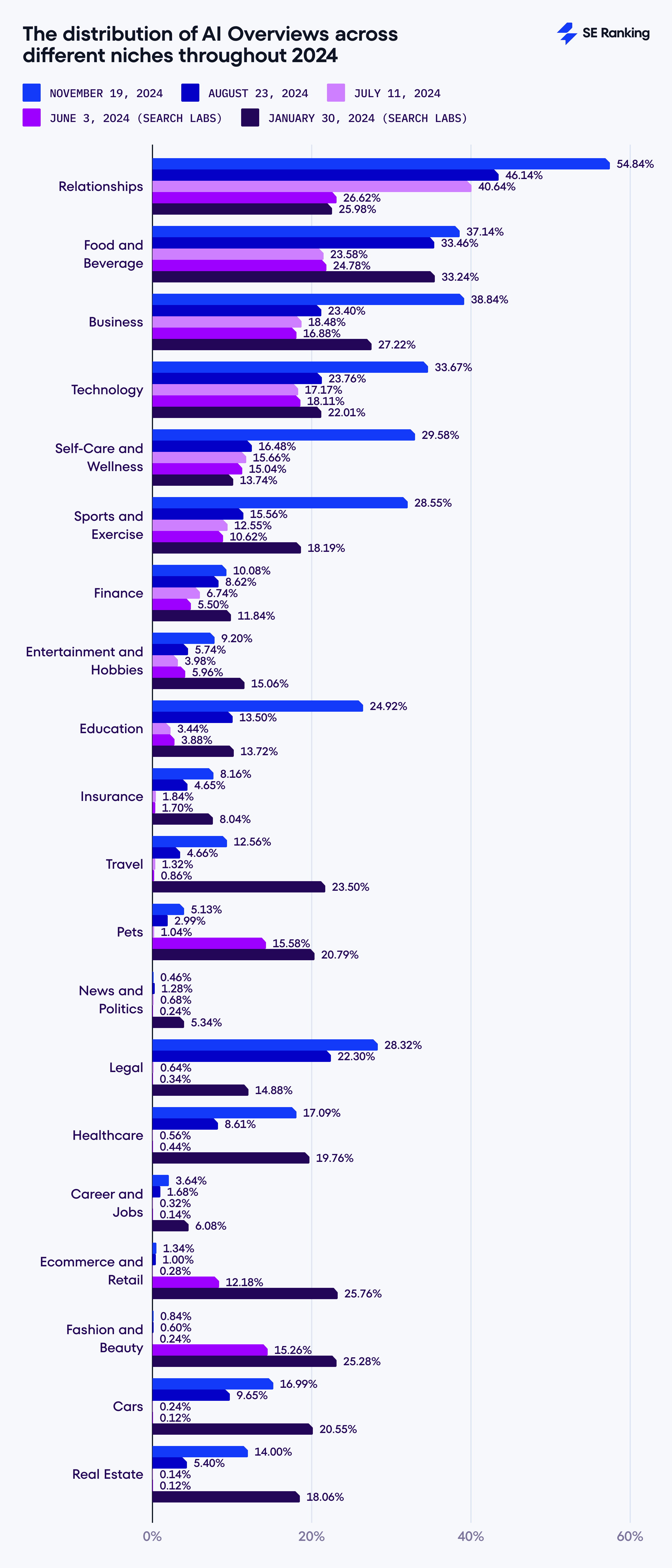

SE Ranking – The State of AI Overviews in 2024: Research Insights and Future Forecasts (2024-12-02)

- Link: https://seranking.com/blog/ai-overviews-2024-recap-research/

- Presents a comprehensive analysis of Google’s AI Overviews feature as of 2024. It includes insights from industry experts discussing the evolution of AI-driven search features and their impact on search visibility and ranking dynamics, including a breakdown of the impact across industries. That breakdown shows a massive drop: once again, Google takes your content and offers practically nothing in return. And we can be fairly sure that sooner or later we will see ads displayed there alongside your content.

@Goog_Enough (X) – Google’s AI can hallucinate, so verify information from AI Overviews before trusting it

- Link: https://x.com/Goog_Enough/status/1921173382881509885/photo/1

- A cautionary reminder that, despite their authoritative appearance, Google’s AI Overviews can still produce false or misleading information, known as AI hallucinations. Always evaluate AI-generated summaries critically instead of treating them as guaranteed facts or trustworthy information.