Search Generative Experience (SGE) – What Is It?

The search engine as we know it is undergoing a seismic shift – not through a new interface or ad product, but through the rise of AI-driven search. At the heart of this transformation is SGE, short for Search Generative Experience, Google’s ambitious and controversial experiment to integrate generative AI directly into its search results.

But what exactly is SGE, how does it work, and what does it mean for users, content creators, and the web at large?

Redefining Search Through AI

Launched in mid-2023 within Google’s Search Labs, SGE represents a fundamental rethinking of how users interact with search engines. Traditionally, search has functioned as an index – a curated list of links pointing users toward third-party websites that (hopefully) contain the answers they’re looking for.

SGE, by contrast, flips that model. Using generative AI, Google now attempts to synthesize and summarize answers within the search results themselves, without requiring users to click away. When a user enters a query, SGE generates a cohesive response – often a paragraph or two – that pulls together information from multiple sources across the web. In some cases, it includes cited links below the answer. In others, the AI stands on its own.

To the user, this may look like a helpful, conversational snippet. But to the publishers, marketers, and businesses who have long relied on traditional organic traffic from Google Search, it represents something else entirely: a disintermediation of the web.

How Does SGE Work?

SGE is built on large language models (LLMs) – similar to those powering chatbots like ChatGPT or Google’s own Gemini. When you type a question or prompt, the system doesn’t just fetch links. It processes your query, interprets the intent, and crafts a synthetic summary of relevant information based on data indexed across the web.
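To make the retrieve-then-summarize idea concrete, here is a deliberately toy Python sketch. It is not how Google implements SGE, whose internals are not public. Word overlap stands in for query interpretation, and picking each page's first sentence stands in for the LLM's generated summary. The `Page` type, the example URLs, and the function names are all hypothetical. What the sketch does show is the structural point this article is concerned with: the answer is assembled from other people's pages, and citations appear only if the pipeline chooses to keep them.

```python
import re
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Page:
    url: str   # hypothetical source URL
    text: str  # page content assumed to be already indexed


def tokens(text: str) -> set:
    """Lowercase word tokens: a crude stand-in for real query understanding."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, index: List[Page], k: int = 2) -> List[Page]:
    """Rank pages by word overlap with the query; keep the top k that overlap at all."""
    q = tokens(query)
    scored = [(len(q & tokens(p.text)), p) for p in index]
    scored = [(s, p) for s, p in scored if s > 0]
    # Sort on the score only; ties keep their original index order (stable sort).
    scored.sort(key=lambda sp: sp[0], reverse=True)
    return [p for _, p in scored[:k]]


def summarize(pages: List[Page]) -> Tuple[str, List[str]]:
    """Toy 'generation' step: join each page's first sentence and list its URL.

    A real system would use an LLM here; this only shows where sources enter the answer.
    """
    sentences = [p.text.split(". ")[0].rstrip(".") + "." for p in pages]
    return " ".join(sentences), [p.url for p in pages]


index = [
    Page("https://example.com/solar", "Solar energy costs have fallen sharply. Panels last decades."),
    Page("https://example.com/nuclear", "Nuclear energy has high upfront costs. Plants run for decades."),
    Page("https://example.com/bread", "Bread needs flour, water and yeast. Knead well."),
]
query = "How does solar energy compare to nuclear in cost?"
answer, sources = summarize(retrieve(query, index))
print(answer)
print(sources)
```

Discarding the returned `sources` list would still leave a fluent answer, which is why source visibility has to be a deliberate design decision rather than something that happens automatically.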

In most cases, Google overlays this AI-generated summary at the top of the search results page, sometimes accompanied by follow-up suggestions like “Ask a follow-up” or “Expand this answer.” The experience feels conversational and streamlined – a departure from the traditional blue-link format that has defined Google Search for decades.

Google describes SGE as an “experiment,” but it’s already shaping the direction of the company’s long-term search strategy.

Implications for SEO and Web Traffic

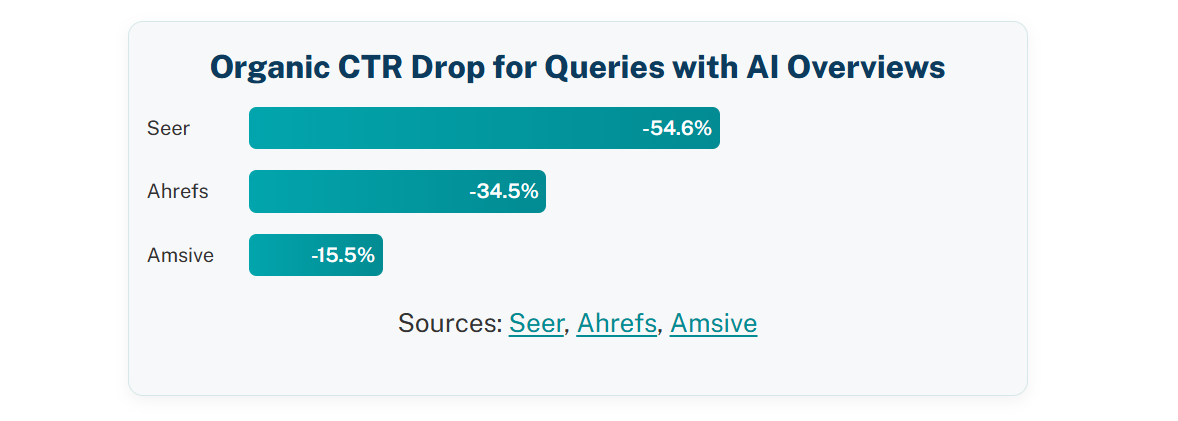

SGE introduces major uncertainty for anyone who depends on Google for visibility – especially content publishers, bloggers, ecommerce sites, and affiliate marketers. Since SGE aims to provide direct answers, many users may no longer need to click through to source websites. That means fewer pageviews, lower ad revenue, and potentially less influence for content creators.

Even more concerning is that not all AI overviews clearly credit their sources, and some generate summaries based on dozens of pages without giving any single site meaningful exposure. For small publishers, this could result in a scenario where their content is used to train or fuel an answer – without receiving any traffic or recognition in return.

This is at the heart of a growing ethical and economic debate. While Google claims its AI models are trained on publicly available data, many publishers argue that SGE effectively repurposes the labor of others – drawing from original reporting, expert analysis, and curated information to generate a free-to-consume summary that competes directly with their own content.

Content Publishers and Creators Without Compensation

What makes this particularly painful for publishers is that SGE produces no direct revenue for those whose content it depends on. Unlike a traditional search result, where a well-ranking page could lead to advertising impressions, subscriptions, or product conversions, SGE answers often eliminate the need to click altogether.

From a technical standpoint, SGE is not “creating” new knowledge – it is rephrasing, condensing, and recombining existing information. The intelligence is statistical, not conceptual. While this is useful for efficiency, it raises a fundamental question: if AI is trained on the work of journalists, researchers, educators, and domain experts – and then outputs derivative summaries that draw traffic away from those very creators – who truly benefits?

At scale, this dynamic has serious consequences. It undermines the financial viability of quality publishing, weakens the incentive to invest in original reporting, and may accelerate a race to the bottom where surface-level content dominates because deeper, well-researched work is cannibalized without compensation.

Benefits and Risks for Users

SGE changes how users access and process information, replacing navigation with synthesis. While that offers speed and simplicity, it also reshapes how trust, truth, and critical thinking function in the search experience.

Key benefits:

- Faster access to condensed information – instead of manually visiting multiple pages, SGE provides an AI-generated summary directly in the search results. This is especially useful for users looking for high-level overviews or basic answers, cutting research time down to seconds.

- Improved handling of multi-part or exploratory queries – SGE can respond to complex, layered questions more fluidly than traditional search. For example, a user asking “How does solar energy compare to nuclear in terms of cost and environmental impact?” may get a structured, synthesized answer without needing to read three separate sources.

- Lower barrier to understanding unfamiliar topics – for users with limited background in a subject, or those with reading or language limitations, SGE makes it easier to absorb information without being overwhelmed by technical jargon or long-form content.

- Better support for mobile and on-the-go use – on smaller screens where navigating multiple tabs or scrolling through long articles is frustrating, SGE offers one-screen summaries that improve usability and convenience.

Key risks:

- Lack of source transparency and citation depth – SGE often provides answers without clearly identifying which sources were used or how they were prioritized. This makes it difficult for users to evaluate credibility, spot bias, or verify facts independently.

- Increased risk of misinformation and hallucinations – like all large language models, SGE can confidently generate inaccurate or outdated claims. If the underlying data is flawed or misunderstood, the summary may mislead users while appearing authoritative.

- Over-simplification of complex or controversial issues – nuanced topics (such as climate policy, medical treatments, or historical conflict) are reduced to brief, generalized answers that may obscure key debates, ethical concerns, or minority perspectives.

- Bias toward dominant narratives and data sets – because SGE is trained on widely available and often mainstream content, it may unintentionally suppress underrepresented voices, independent research, or emerging viewpoints that haven’t yet reached algorithmic prominence.

- Erosion of user critical thinking and media literacy – when the AI presents a ready-made answer, users may stop evaluating, comparing, or questioning sources. Over time, this can weaken essential skills like recognizing manipulation, distinguishing opinion from evidence, or understanding the limits of certainty.

- No clear path for correction or accountability – if an SGE answer is wrong, biased, or harmful, users cannot easily report, dispute, or understand how it was generated. Unlike human authors or publishers, AI summaries lack editorial bylines or revision histories.

- Potential normalization of passive information consumption – as users grow used to quick AI-generated conclusions, they may begin expecting all knowledge to be instantly accessible and neatly packaged. This risks flattening the value of in-depth journalism, original research, or detailed reporting.

Structural Consequences for the Web and Society

SGE doesn’t just change how users search – it transforms the economics, incentives, and architecture of the web itself. When search becomes a summary, and that summary is controlled by an AI layer, the ripple effects touch every part of the information ecosystem.

For a broader look at the benefits and drawbacks of AI, read our full article on the biggest AI myths and what artificial intelligence can and cannot do today.

Implications for content creators and publishers:

- Loss of traffic and monetization potential – when answers appear directly in the search results, users are less likely to click through. This erodes ad revenue, subscription conversions, and affiliate income, especially for sites that previously ranked well on informational queries.

- Decreased visibility for small or emerging voices – AI-generated answers often favor popular or frequently cited sources, making it even harder for new, niche, or independent publishers to gain visibility through organic search. Paid traffic is no equalizer either, since larger publishers can afford to spend far more on promotion than smaller ones.

- Increased pressure to write for machines, not humans – creators may feel pushed to optimize content not for quality or clarity, but to align with AI training patterns. This leads to repetitive, formulaic content designed to be scraped or summarized rather than read and appreciated.

- Extraction without compensation – publishers invest time, expertise, and resources into producing original content. Yet tools like SGE summarize, paraphrase, and repurpose that work without consistently attributing credit or offering any measurable return. The result is a growing gap between the effort required to create valuable content and the benefit received for doing so. Over time, that imbalance could lead to a sharp decline in quality content creation altogether – simply because it will no longer be viable or worth the investment.

This shift is no longer theoretical – it's already happening. With SGE and its successor, AI Overviews (AIO), Google has introduced a system that, while seemingly helpful to users, exploits the labor of original content creators. It extracts information from news articles, blog posts, and expert sources, then rephrases that material into AI-generated summaries displayed directly in search results. Users get quick answers without visiting the source – and the original authors receive no traffic, no visibility, and no compensation.

At present, the feature offers no revenue-sharing option for the people who create the information it uses for training and displays to users. No licensing agreement. No framework to reward the creators whose work fuels the system. This isn't a technical oversight – it's a systemic model of extraction. The platform captures and monetizes the value of human creativity but gives nothing back in return. To put it plainly: creators get absolutely zero. At best, this is an unsustainable model. At worst, it's a slow erosion of the open web.

And this is just the beginning. Once Google begins placing ads directly inside these AI-generated responses – a move the company is already testing – the situation becomes even more paradoxical. Publishers could end up paying Google to reach users with content they themselves produced. Google becomes the summarizer, the gatekeeper, and the monetization channel, while the originators of the content are left invisible.

The consequences will be far-reaching. Producing content will become more expensive. Businesses will be forced to spend more on ads to achieve the same visibility they once earned organically. Those costs won't vanish; they'll be passed along to consumers, built silently into the price of products and services. The few seconds users save by reading an AI summary instead of visiting a site will be offset by higher prices elsewhere.

And all this is unfolding against a backdrop where Google's traditional search results are already underperforming, often flooded with low-quality, SEO-optimized pages and irrelevant ads. In many cases, SGE isn't enhancing the experience – it's compensating for a system that has stopped delivering real value. If this is the future of search, then we must ask: are we improving the user experience, or just masking its collapse behind a smoother interface?

Implications for platforms and institutions:

- Concentration of influence within a single system – with SGE, Google doesn’t just guide you to information – it becomes the information. The platform becomes the default explainer, interpreter, and filter, with little transparency into how answers are assembled or what perspectives were excluded.

- Increased risk of shaping public knowledge through unseen bias – even if unintentional, the AI’s output is shaped by its training data and model design. If the majority of content it learns from reflects a single worldview, that bias will be reflected and amplified in search responses.

- Greater vulnerability to coordinated manipulation – if actors understand how content is ingested and surfaced by SGE, they may design entire content ecosystems around influencing AI outputs – subtly skewing what users see as “consensus”.

Implications for society and public trust:

- A weakening of democratic knowledge structures – when people stop visiting news outlets, research institutions, or specialist platforms, those institutions lose both their audience and their civic role. SGE shortcuts the process of deliberation and dialogue – replacing it with a single, compressed output that can’t easily be questioned or debated.

- Less friction, but also less scrutiny – SGE gives users what they want quickly, but sometimes what users need is discomfort, complexity, or contradiction. Those rarely survive algorithmic distillation. What’s fast and fluent isn’t always what’s fair or accurate.

- A dangerous illusion of authority – users may assume that AI-generated summaries are neutral, complete, and correct. But these answers come from a probabilistic model – not a peer-reviewed source, not a journalist, not an expert. Without visible human judgment, trust becomes misplaced.

What Should Change in SGE (and Beyond) in the Near Future?

If SGE is to remain part of the future of search, it must evolve to serve not only users and platforms – but also the wider ecosystem of creators, institutions, and public knowledge it relies on. A generative system that only extracts value without giving anything back risks collapsing the very web it summarizes.

For Google and AI platform designers:

- Make sources visible by default – every AI-generated summary should clearly display the sources it draws from, and ideally, how those sources were selected or weighted. Without this transparency, users are left guessing whether the information is credible, biased, or manipulated. For example, if a summary on climate change is generated without any reference to scientific consensus or peer-reviewed research, users may be misled by surface-level or controversial takes that sound authoritative. Trust in AI cannot be built on opacity.

- Design fair compensation models for content creators – if a publisher’s work is used to train or inform AI responses, they should receive tangible value in return – whether through attribution, licensing payments, or revenue-sharing agreements. Consider how Spotify or YouTube compensate artists through streaming revenue. A similar structure could be applied to AI: the more a piece of content contributes to AI-generated output, the more credit or payment the original creator should receive.

- Give users control, depth, and traceability – users should not be limited to surface answers. They should be able to click into the summary and explore which sources were referenced, how the interpretation was constructed, and where to go for more detail. For example, a search about vaccine safety should allow users to dig deeper into the data sources and understand whether the summary comes from public health institutions, academic journals, or blogs.

- Flag uncertainty and reflect complexity – not all questions have a definitive answer. AI should indicate when a topic is debated, emerging, or lacks expert consensus. Showing only one confident answer can give users a false sense of certainty. In legal, ethical, or political issues – such as abortion laws, climate policy, or economic models – SGE should make visible the diversity of expert viewpoints, not bury them.
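The proportional compensation idea proposed above comes down to simple arithmetic: each source receives revenue × (its contribution ÷ total contribution). The Python sketch below is purely illustrative and rests on a large assumption: that some agreed, non-negative "contribution weight" per source could be measured for each AI answer. How to measure that is an open question, and no such payout scheme exists in SGE today. The function name, source names, and figures are hypothetical.

```python
from typing import Dict


def pro_rata_payouts(revenue: float, weights: Dict[str, float]) -> Dict[str, float]:
    """Split a revenue pool among sources in proportion to their contribution weights.

    Hypothetical model for illustration only: `weights` are assumed, non-negative
    attribution scores per source, not anything Google publishes or uses.
    """
    if any(w < 0 for w in weights.values()):
        raise ValueError("contribution weights must be non-negative")
    total = sum(weights.values())
    if total == 0:
        # No measurable contribution: nobody is paid from this pool.
        return {source: 0.0 for source in weights}
    # Each source receives revenue * (its weight / sum of all weights).
    return {source: revenue * w / total for source, w in weights.items()}


# Example: a $100 pool for one AI answer that drew on three sources.
payouts = pro_rata_payouts(100.0, {"news-site": 3, "blog": 1, "journal": 1})
print(payouts)  # {'news-site': 60.0, 'blog': 20.0, 'journal': 20.0}
```

As with streaming royalty pools, the hard part is not the division but agreeing on what counts as a contribution (being quoted, being cited, or being used in training) and keeping the weights from being gamed.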

For content creators and media institutions:

- Shift toward layered, defensible content – creators should invest in producing content with original insight, data, and expert perspectives. Content that is shallow, SEO-driven, or templated will be easily replicated and paraphrased by AI. But investigative journalism, niche expertise, and original commentary still hold defensible value. For example, an in-depth report on corruption in a local government cannot be mimicked by an LLM trained on general web data.

- Push for legal and commercial safeguards – current copyright laws were not designed for content that is digested, paraphrased, and reassembled by AI systems. Creators must advocate for updated frameworks that recognize the economic value of their intellectual labor. This may include opt-out mechanisms, licensing protocols, or compensation structures similar to those used in the music industry.

- Strengthen audience awareness around authorship – creators and publishers should clearly communicate how content is produced, who is behind it, and why it can be trusted. Bylines, transparency about funding or affiliations, and editorial standards will help audiences distinguish between AI-generated summaries and original human-authored journalism. In a world flooded with machine-written content, clear signals of authenticity matter.

For users and institutions of knowledge:

- Treat AI summaries as starting points, not conclusions – users should be encouraged to explore beyond the first answer. For example, if SGE provides a summary of the causes of inflation, readers should consider alternative economic theories, policy debates, and historical contexts before drawing conclusions. Critical reading and healthy skepticism are skills that must be retained, not replaced.

- Actively support human-made knowledge – journalism, academia, and creative industries rely on public engagement and funding. Subscriptions to trusted news sources, donations to nonprofit publishers, and institutional support for libraries and research all play a role in sustaining the quality of information online. If AI becomes the default interface but draws from human work, we must ensure those human institutions survive.

- Understand that search is not neutral – it hasn't been since SEO became important to companies, and SGE simply makes that fact more visible. Even before generative AI, search results were shaped by SEO strategies, commercial interests, and algorithmic ranking. What SGE changes is the degree to which content is paraphrased, filtered, and editorialized without visibility. When AI outputs a confident summary, it is doing more than retrieving data – it is creating a narrative. Users must recognize that these narratives are influenced by training data, design choices, and omitted context. Being informed today means questioning not only what is shown, but what has been excluded, compressed, or softened by AI design.
