A/B testing is a process that compares two versions of a webpage or app against each other. It is also known as split testing or bucket testing. A/B testing determines which of the two versions you compare performs better. It is essentially an experiment in which two or more variants of a page are shown to users at random, and statistical analysis is used to determine which variation performs better for a given conversion goal. Running an A/B test that directly compares a variation against the current experience lets you ask focused questions about changes to your site or application and then gather data about the effect of those changes. Testing takes the guesswork out of website optimization and enables informed choices that shift business discussions from “we think” to “we know.” By measuring the effect that changes have on your metrics, you can make data-informed decisions and confirm whether each change actually produces a positive outcome.

In an A/B test, you take a page or application screen and modify it to create a second version of the same page. The change can be as simple as a single headline or button, or as large as a complete redesign of the page. Then half of your traffic is shown the original version of the page (known as the control), and the other half is shown the modified version (the variation).
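The 50/50 split described above can be sketched in a few lines. This is only an illustration: hashing a visitor ID is one common way to get a stable, roughly even split, not necessarily what any particular testing tool does, and the names `assign_variant`, `visitor-…`, and `headline-test` are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str,
                   variants=("control", "variation")) -> str:
    """Map a visitor to a variant, the same way on every visit.

    Assumption: visitors carry a stable ID (for example a cookie value).
    Hashing the ID together with the experiment name spreads visitors
    roughly evenly across the variants.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    counts = {"control": 0, "variation": 0}
    for i in range(10_000):
        counts[assign_variant(f"visitor-{i}", "headline-test")] += 1
    print(counts)  # the two counts should be close to each other
```

Because the assignment depends only on the ID and the experiment name, a returning visitor keeps seeing the same version, which keeps the measured engagement for each experience clean.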

As visitors are served either the control or the variation, their engagement with each experience is measured, collected in an analytics dashboard, and analyzed through a statistical engine. You can then determine whether the change in experience had a positive, negative, or no effect on visitor behavior.

A/B testing lets individuals, teams, and organizations make careful changes to their user experiences while collecting data on the results. This allows them to build hypotheses and learn why certain elements of their experiences affect user behavior. It also means that their opinion about the best experience for a given goal can be proven wrong by an A/B test.

Beyond simply answering a one-off question or settling a disagreement, A/B testing can be used repeatedly to keep improving a single goal, such as conversion rate, over time.

For example, a B2B technology company might want to improve the quality and volume of the sales leads it gets from campaign landing pages. To accomplish that goal, the team would try A/B testing changes to the headline, call to action, visual imagery, and overall layout of the page.

Testing one change at a time helps them pinpoint which changes affected user behavior and which did not. Over time, they can combine the effect of multiple winning changes from these experiments to demonstrate the improvement of the new experience over the old one.

This method of introducing changes also allows the experience to be optimized for a desired outcome, and it can make crucial steps in a marketing campaign more effective.

By testing different ad elements, you can learn which version attracts more attention and clicks. By testing the subsequent landing page, you can learn which layout best converts visitors into customers. The overall spend on an advertising campaign can be reduced significantly if the elements of each step work as efficiently as possible to acquire new customers.

Likewise, designers and product developers can use A/B testing to demonstrate the effect of new features or changes on the user experience. User engagement, product onboarding, modals, and in-product experiences can all be optimized with A/B testing, as long as the goals are clearly defined and you have a clear hypothesis.

Google permits and encourages A/B testing and has stated that running an A/B or multivariate test poses no inherent risk to your website’s search rank. However, it is possible to jeopardize your search rank by abusing an A/B testing tool for purposes such as cloaking. To ensure this doesn’t happen, Google has listed some best practices to stay safe:

Following is the A/B testing framework I use:

Your website analytics will give me insight into where to start optimizing. By identifying high-traffic areas of your website or application, I can gather data faster. I first identify the pages with high drop-off or low conversion rates and then improve those sections.

I set well-defined conversion goals: the metrics I use to determine whether the new variation is more successful than the previous version. Goals can be anything from link clicks and purchase-button clicks to email signups.

After identifying my goal, the next step is generating A/B testing ideas and hypotheses for why I think they will be better than the current version. Once I have a list of hypotheses, I prioritize them by expected impact and difficulty of implementation.

A/B testing software such as VWO, Google Optimize, and Optimizely helps me make the required changes to an element of your site or app. This can range from changing the color of a button or swapping the order of elements on a page to hiding navigation items or designing something entirely custom.

Most A/B testing tools have a visual editor that makes these changes easy, and I QA each experiment to make sure it works as expected.

I start the experiment and wait for the visitors to participate. This is the point where visitors to your site or application will be distributed randomly to either your control or variation experience. Their engagement with each experience will be noted and measured to later compare and determine how each performed.

Once the test is complete, it is time to read the results critically. The A/B testing software presents the data from the test, shows how each version of the page performed, and highlights whether the difference between them is statistically significant.
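To make "statistically significant difference" concrete, here is a minimal sketch of one standard check, a two-sided two-proportion z-test. It is an illustration under assumptions, not the method any specific tool uses; the function name and the visitor counts in the usage example are made up.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates.

    conv_a / n_a: conversions and visitors for the control.
    conv_b / n_b: conversions and visitors for the variation.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided tail probability of the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical data: 200 of 5,000 control visitors converted,
    # 250 of 5,000 variation visitors converted.
    z, p = two_proportion_z_test(200, 5000, 250, 5000)
    print(f"z = {z:.2f}, p-value = {p:.4f}")
```

A p-value below the chosen threshold (commonly 0.05) is what a frequentist report would flag as a significant difference between the control and the variation.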

If the variation is a winner, congratulations! See whether you can apply what you learned from the experiment to other pages of your site or app, and keep iterating on the test to improve your results further.

It varies. The cost comprises the charges for tools and for my work, and it differs from one service to another and from customer to customer. A few customers need more work (setting everything up from scratch and giving directions to designers before I even begin A/B testing), and some need less. A few customers need a full-time commitment, and some only need support with the A/B testing tools and tests set up when required. It truly depends.

Hence, I tailor my services to your specific needs, depending on your company’s size and scale and on the number of tasks we need to tackle or improve during my contract.

Therefore, I estimate the cost of my A/B testing services after a detailed review of your project. Once I have all the essential data about your site, application, or project, I can send you the relevant calculations and the total cost.

There are four major testing methods: A/B testing, split URL testing, multivariate testing, and multipage testing.

Many people confuse split URL testing with A/B testing; however, the two are fundamentally different. Split URL testing means testing multiple versions of a page hosted on different URLs. Your website’s traffic is split between the control and the variations, and each version’s conversion rate is measured to choose the winner. The primary difference is that in a split URL test the variations are hosted on different URLs.

A/B testing is preferred when only front-end changes are needed, whereas split URL testing is preferred when significant design changes are required and you don’t want to touch the existing page design.

Setting up a split URL test mainly comprises the following steps:

Multivariate testing (MVT) is a technique in which several elements of a page are changed and variations are created for every possible combination. With this method, you can test all of the combinations within a single test. A multivariate test helps you work out which element on a page has the most effect on its conversion rate. It is more complicated than A/B testing and is best suited to advanced marketers.

For example, say you decide to test two versions each of the hero image and the CTA button color on a page. With MVT, you create one variation for the hero image and one for the CTA button color. To test all the versions, you then create every combination of these variations: 2 hero images × 2 button colors gives 4 combinations.

After running a multivariate test on all combinations, you can use the data to work out which combination affects your page’s conversion rate the most and deploy it.
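The way combinations multiply in a multivariate test can be sketched with the hero image and CTA color example. The variant labels below are placeholders chosen for illustration.

```python
from itertools import product

# Hypothetical variants for the two page elements under test.
hero_images = ["hero_original", "hero_new"]
cta_colors = ["cta_original_color", "cta_new_color"]

# A multivariate test serves every combination of element variants.
combinations = [
    {"hero_image": hero, "cta_color": color}
    for hero, color in product(hero_images, cta_colors)
]

if __name__ == "__main__":
    for number, combo in enumerate(combinations, start=1):
        print(number, combo)
```

Each added element or variant multiplies the number of combinations, and each combination needs its own share of traffic, which is why multivariate tests need far more visitors than a simple A/B test.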

Multipage testing is a type of experimentation where you can test changes to specific components across different pages.

There are two ways of doing multipage testing. First, you can take all of your sales funnel pages and create new versions of each of them. This is called funnel multipage testing. Second, you can test how adding or removing recurring elements, such as security badges or testimonials, affects conversions across a whole funnel. This is called classic or conventional multipage testing.

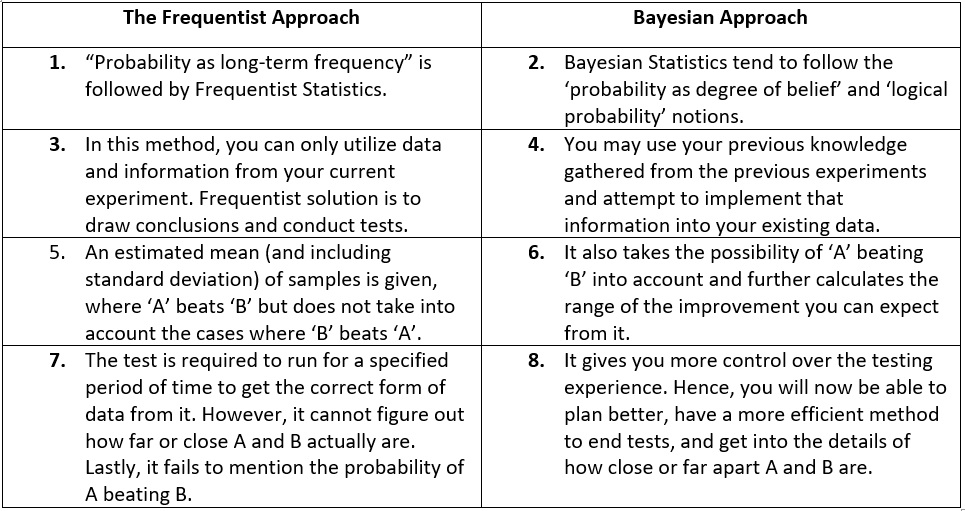

Apart from the testing methods we’ve just discussed, there are two different statistical approaches to testing: Frequentist and Bayesian.

The frequentist approach defines the probability of an event in terms of how often that event occurs in a large number of trials or data points. Applied to A/B testing, anyone taking the frequentist approach needs more data to reach a conclusion, which limits how far you can scale your A/B testing effort.

Under the frequentist approach, it is crucial to define the duration of your A/B test in advance, based on sample size, to reach correct conclusions. The tests rest on the assumption that every experiment can be repeated an unlimited number of times.

Following this approach demands a lot of attention for each test you run, because for the same set of visitors you will be forced to run longer tests than under the Bayesian approach. Each test therefore needs to be treated with care, since you can run only a few tests in a given time span. Compared with Bayesian statistics, it is also less intuitive and often harder to grasp.

Bayesian statistics, on the other hand, is a theory based on the Bayesian interpretation of probability, where probability is expressed as a degree of belief in an event. In simple terms, the more you know about an event, the better and faster you can predict its outcome. Rather than being a fixed value, probability under Bayesian statistics can change as new data is collected. The belief may be based on past data, such as the results of previous tests, or on other information about the event.

Compared with the frequentist approach, the Bayesian approach can give actionable results almost 50% faster while still focusing on statistical significance. At any point, provided you have enough data, the Bayesian method tells you the probability that variation A has a lower conversion rate than variation B or the control. It has no fixed time limit attached to it, nor does it require an in-depth knowledge of statistics.
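As a rough sketch of how such a probability can be computed, the snippet below models each version's conversion rate with a Beta posterior and estimates, by simulation, how often the variation's rate comes out higher. The uniform Beta(1, 1) prior, the function name, and the sample counts are assumptions made for illustration; real tools may use different priors and methods.

```python
import random


def prob_variation_beats_control(conv_a: int, n_a: int, conv_b: int, n_b: int,
                                 draws: int = 20_000, seed: int = 0) -> float:
    """Monte Carlo estimate of P(rate of variation > rate of control).

    Assumption: a uniform Beta(1, 1) prior on each conversion rate, so the
    posterior is Beta(1 + conversions, 1 + non-conversions).
    """
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        if rate_b > rate_a:
            wins += 1
    return wins / draws


if __name__ == "__main__":
    # Hypothetical data: control 200/5,000, variation 250/5,000.
    print(prob_variation_beats_control(200, 5000, 250, 5000))
```

The posterior is updated simply by adding new conversions and visitors to the counts, which is the sense in which the probability changes as new data is collected.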

In the simplest terms, the Bayesian approach resembles how we approach things in everyday life. Say you have misplaced your cell phone somewhere in your home. As a frequentist, you would only use a GPS tracker and check the area the tracker points to. As a Bayesian, you would use the GPS tracker and also check all the places in the house where you have found your phone before. In the former, the event is treated as a fixed value; in the latter, all past and future knowledge is used to locate the phone. To get a clearer understanding of the two, check out the comparison below.

Once you have chosen one of these approaches based on your site’s requirements and business goals, start the test and wait for the stipulated time to achieve statistically significant results. Remember that whichever approach you pick, your testing method and statistical accuracy will determine the final results. One such condition is the timing of the test campaign: the timing and duration of the test must be on point. Calculate the test duration from your average daily and monthly visitors, your current conversion rate, the minimum improvement in conversion rate you expect, the number of variations (including the control), the percentage of visitors included in the test, and so on.

The body of your site needs to state clearly what the visitor is getting and what’s in store for them. It should also resonate with your page’s headline. A well-written headline and body can increase the odds of turning your site into a conversion magnet.

While creating content for your page’s body, keep these two parameters in mind:

The headline of your website or app is the first thing a visitor notices, so it shapes their first impression. That first impression determines whether the visitor goes further down your conversion funnel. This is why it is essential to be extremely careful about your headline’s copy, styling, and formatting. Make sure the headline catches visitors’ attention as soon as they land on your website. It needs to be short, clear, and to the point, explaining what the product or service is and what its benefits are. A/B testing lets you experiment with different fonts, sizes, copy, and messaging.

Because everything seems so essential, businesses often struggle to keep only the most important elements on their site. A/B testing can settle this issue once and for all. For instance, for an eCommerce store, the product page is critical from a conversion point of view. With technology at its current stage, customers like to see everything in high definition before buying it. Your product page should therefore be in its most optimized form in terms of design and layout. Along with the copy, the design and layout of a page include images (product images, offer images, and so on) and videos (product videos, demo videos, advertisements, etc.). Your product page should answer all of your visitors’ questions without confusing them and without getting cluttered:

Make sure your potential buyers are not confused by your writing style. Avoid difficult vocabulary they may not understand, and keep sentences simple.

Tags such as ‘only 3 left in stock’, a countdown like ‘offer ends in 1 hour and 10 minutes’, festive offers, and exclusive discounts are very helpful. They create urgency and nudge the customer to purchase sooner.

Depending on the products you sell, find creative ways to give customers clear and concise product descriptions, so that potential buyers do not leave because of inadequate information on your site. They should be able to find answers to their questions easily instead of being confused by disorganized copy. The copy should be clear and coherent and should include easily visible size charts, color options, etc.

Both good and bad reviews of your products should be shown on your page. Negative reviews add credibility to your product and page.

You may need A/B testing to find the most optimized version of these critical pages. Pages such as the home page and landing pages in particular need to be on the mark. Declutter your pages using insights from click maps, which show where visitors click and help you identify distractions. Also test ideas such as adding more white space, featuring products with high-definition images, using videos instead of images, or trying out different layouts. The less cluttered your home page and landing pages are, the more likely visitors are to find what they’re looking for quickly and easily.

One of the most important factors in delivering a great user experience is your website’s navigation, and A/B testing can help you optimize it. The home page is the parent page from which all other pages emerge and through which they link to one another, so your site’s navigation begins on the home page.

Make sure your structure is clear enough that visitors can easily find what they are looking for and don’t get lost because of broken navigation. Your site will be more engaging if each click takes visitors to the page they want. In short, you need a clear plan for your site’s structure and for how the various pages link to one another within that structure.

A/B testing lets you experiment with different CTA copy, placement, colors, sizes, and so on until you find the best variation for your page. The CTA is where the action happens: whether customers complete their purchase and convert, and whether they fill in the signup form or not, both directly affect your conversion rate. Once you have a winner, you can test it again to optimize your results further.

Every form needs to be designed for its target audience. Forms are the medium through which prospective customers get in touch with you, and they become even more important when they are part of your purchase funnel. Short forms may work well for some organizations, while longer forms may do wonders for the lead quality of others. You need to work out which kind of form suits your audience best, whether longer or shorter, less or more detailed.

Another helpful tip is to use form analysis, trying out different research tools and methods, to find out which style works best for you. Any problem area it uncovers is an opportunity to improve your form.

A/B testing can help you determine whether adding social proof is a good idea for your page or app, which kinds of social proof to add, and how to lay it out and where to place it. Social proof can take the form of recommendations and reviews from experts in a particular field, from celebrities, or from customers themselves, or it can come as testimonials, media mentions, awards, badges, and so on. It lends credibility by validating the claims your website makes.

Since each A/B testing tool suits different businesses, it is hard to say which one is best. Options include Optimizely, VWO, Google Optimize, and many others. Each tool differs in features, pricing, level of support, and which capabilities it includes or lacks.

So, A/B testing tools can only be chosen after a detailed review of your requirements. What you need to test, the scope (the number of pages and visitors required for the A/B tests), the number of A/B tests that will run, and the time span we have will together determine the final cost.

You should run your A/B tests until they reach statistically significant results; for some projects this can take days, and for others weeks or months. It truly depends, which is why there is no single answer. A solid testing tool should alert you when you’ve gathered enough data to draw reliable conclusions.

The duration depends on factors such as how much traffic your site gets, its conversion rates, and which channel the traffic comes from (you should test on your primary lead generation channel). Once you reach a conclusion, remember to update your site with the winning variation(s) and remove all elements of the test straightaway, such as test scripts, alternate URLs, or markup.

To begin with, each variant can produce very different results. For example, suppose you run an A/B test on a bank’s online loan application form. One third of customers are shown the long version of the form. Another third are shown a new “lighter” version, in which they enter only their phone number and preferred loan amount and are later contacted by the call center.

Finally, the remaining third are shown a self-serve online form where the customer can scan their ID and does not need to visit the bank in person. Each of these variants will produce different results.

Therefore, picking the wrong hypothesis can seriously affect your business objectives. If one of the tested variants performs very differently from what you expected, your business results will differ too. So keep in mind that a test already affects your results while it is running.

Whether the results are good or bad, test outcomes give you significant insights and help you design the next test more effectively. You can learn more about the different kinds of errors when dealing with the maths of A/B testing. Your intuition or personal views should not drive the hypothesis or the goals of an A/B test. And whether a test is passing or failing, you should let it run its full course so that it reaches statistical significance.

The A/B testing process may seem simple: randomly split incoming visitors among multiple versions of a page to gauge which one has the most positive effect on the metrics you care about. However, how the test is carried out and how the results are calculated can get tricky. You can never be 100% sure of the accuracy of the results you obtain. Statistics is an essential part of A/B testing, and statistics is based on calculating probabilities. You therefore cannot eliminate the risk entirely; instead, you can try to increase the probability that the test result is true.

Even after following all of these steps, your test results can be wrong because of errors. Type I and Type II errors creep into the process unnoticed and lead to a wrong conclusion. In addition, misreading the reports can distort the results, cost you conversions or money, and mislead your entire optimization program.

Now I’ll discuss what Type I and Type II errors mean and what their consequences are. Furthermore, I will also cover how we can avoid them.

A Type I error makes it seem that your test is succeeding and your variation is having an impact when the uplift or drop is not real.

Type I errors are also known as Alpha (α) errors or false positives, and the probability of making one is denoted by α. They typically occur when you end your test before it reaches statistical significance. The apparent impact, whether negative or positive, is only temporary and fades shortly after the winning version is deployed.

You make this error if you end the test before the pre-decided criteria are met and reject your null hypothesis. The null hypothesis states that the change has no impact on the given objective. In a Type I error, the null hypothesis is rejected even though it is true, because the test was cut short or miscalculated.

The probability of a Type I error is tied to the confidence level at which you decide to conclude your test. If you decide to end your test at a 96% confidence level, you are accepting a 4% probability of a false positive, that is, of declaring a winner when the change actually has no effect. Likewise, at a 98% confidence level, that probability is 2%. If you still run into an α error after concluding your test at a 96% confidence level, you can put it down to bad luck: an event with only a 4% probability has occurred.

Suppose your hypothesis is that moving your landing page CTA above the fold will increase the number of signups. The null hypothesis is then that changing the position of the CTA has no effect on the number of signups received. Once the test starts, you need to let it complete; you should not interrupt it out of curiosity. Say you peek midway through the test, notice that the variation has produced a 60% uplift within seven days, and end the test because you believe you have the result you needed. You reject the null hypothesis and implement the variation. Once it is live, it may not produce a similar impact, and you may see no impact at all. In that case, your test result was skewed by a Type I error.
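The danger of stopping at the first promising peek can be illustrated with a small simulation. In the sketch below both versions have exactly the same true conversion rate (an A/A test), so every "winner" is a false positive. The function names, traffic numbers, and peeking schedule are assumptions made for illustration only.

```python
import math
import random


def is_significant(conv_a: int, conv_b: int, n: int, alpha: float = 0.05) -> bool:
    """Two-sided two-proportion z-test with n visitors in each version."""
    p_pool = (conv_a + conv_b) / (2 * n)
    if p_pool in (0.0, 1.0):
        return False  # no variation in the data, nothing to test
    se = math.sqrt(p_pool * (1 - p_pool) * 2 / n)
    z = (conv_b - conv_a) / n / se
    return math.erfc(abs(z) / math.sqrt(2)) < alpha


def simulate_aa_tests(runs: int = 300, visitors: int = 2000, rate: float = 0.05,
                      check_every: int = 200, seed: int = 1):
    """Return (false positive rate at the planned end, rate when peeking).

    Both versions share the same true conversion rate, so any significant
    result is a Type I error. 'Peeking' counts a run as a false positive if
    it looked significant at any interim check or at the planned end.
    """
    rng = random.Random(seed)
    fixed_hits = 0
    peeking_hits = 0
    for _ in range(runs):
        conv_a = conv_b = 0
        ever_significant = False
        for n in range(1, visitors + 1):
            conv_a += rng.random() < rate
            conv_b += rng.random() < rate
            if n % check_every == 0 and is_significant(conv_a, conv_b, n):
                ever_significant = True  # an impatient tester would stop here
        fixed_hits += is_significant(conv_a, conv_b, visitors)
        peeking_hits += ever_significant
    return fixed_hits / runs, peeking_hits / runs


if __name__ == "__main__":
    fixed_rate, peeking_rate = simulate_aa_tests()
    print(f"waiting for the planned end: {fixed_rate:.1%}")
    print(f"stopping at the first significant peek: {peeking_rate:.1%}")
```

Because the peeking rule also includes the final check, it can never produce fewer false positives than waiting for the planned end, and in practice it usually produces noticeably more.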

It is impossible to rule out a Type I error completely, but you can reduce its likelihood. Close your tests only once they reach a sufficiently high confidence level. A 95% confidence level is generally considered good and should be your minimum target, although a Type I error can still occur at that level. You can gain additional assurance by letting your tests run longer: once a large enough sample has been tested, the credibility of your results increases.

After a Type II error, people tend to feel demotivated and abandon their tests. Believing that the test had no impact, they dismiss their own efforts and lose the motivation to continue with the CRO roadmap.

β denotes the probability of making a Type II error (also called a Beta (β) error or false negative). The probability of not making a Type II error is 1 − β, which is the test’s statistical power. The higher the statistical power of your test, the lower the probability of a Type II error. If you run a test at 92% statistical power, there is only an 8% chance that you will end up with a false negative.

A Type II error occurs when a test appears inconclusive or unsuccessful and the null hypothesis appears to be true. You end up mistakenly accepting the null hypothesis and rejecting your hypothesis and your variation. In reality, the variation does affect the desired goal, but the results fail to show it and the evidence seems to favor the null hypothesis. A test’s statistical power depends on the sample size, the statistical significance threshold, the number of variations in the test, and the minimum effect of interest.
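How power responds to sample size can be sketched with a normal approximation for a two-sided test on two conversion rates. The function name and the example rates are assumptions for illustration, and the approximation ignores the negligible chance of a significant result in the wrong direction.

```python
import math
from statistics import NormalDist


def approximate_power(p_control: float, p_variation: float,
                      n_per_variant: int, alpha: float = 0.05) -> float:
    """Approximate probability (1 - beta) of detecting a real difference."""
    se = math.sqrt(
        p_control * (1 - p_control) / n_per_variant
        + p_variation * (1 - p_variation) / n_per_variant
    )
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    z_effect = abs(p_variation - p_control) / se
    return NormalDist().cdf(z_effect - z_crit)


if __name__ == "__main__":
    # Hypothetical rates: control converts at 4%, variation truly at 5%.
    for n in (1000, 3000, 7000):
        power = approximate_power(0.04, 0.05, n)
        print(f"{n} visitors per variant: power {power:.2f}, beta {1 - power:.2f}")
```

With too few visitors the power is low, so a variation that really does work will often look as if it made no difference, which is exactly the Type II error described above.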

For example, suppose you want to reduce the percentage of drop-offs at a particular stage, and you hypothesize that adding security badges to your payment page will help. You create a variation of the payment page with the security badges and run your test. You peek after ten days, see no change in conversions or drop-offs, and conclude that the null hypothesis was true.

However, you are not convinced by that conclusion, so you re-run the test. This time you let it run for longer and eventually see a big improvement in your conversion goal. What you experienced the first time was a Type II error, caused by closing the test before the required sample size had been reached.

The remedy is to improve the statistical power of your tests, which you can do by increasing your sample size and reducing the number of variants. Power can also be improved by lowering the statistical significance threshold, but that increases the probability of Type I errors. Since reducing the chance of Type I errors is normally more important than avoiding Type II errors, as Type I errors are harder to deal with, it is not advisable to tamper with the significance threshold just to improve power. As a test owner, your main goal is to run experiments and find what works best; you should not have to worry about the statistics.

In the case where a test is concluded before reaching statistical significance, it is possible to end up with a Type I error or a false positive. The end result of a test is completely dependent on achieving statistical significance according to the frequentist approach towards inferential statistics.

With a Bayesian statistics engine, two quantities are calculated: the probability that a variation will beat the control, and the potential loss you may incur if you deploy that variation. A test is concluded and the variation declared the winner only once its potential loss falls below a certain threshold. This threshold is determined from the conversion rate of the control, the number of visitors who were part of the test, and a constant value.
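The idea of a potential loss can be sketched as an expected loss computed from Beta posteriors: the conversion rate you would give up, on average, if you deployed the variation and the control was in fact better. This is an illustration only. The uniform prior, the function names, and especially the fixed `threshold` value are assumptions; the text above says the real threshold is derived from the control's conversion rate, the visitor count, and a constant, and that formula is not reproduced here.

```python
import random


def expected_loss_of_variation(conv_a: int, n_a: int, conv_b: int, n_b: int,
                               draws: int = 20_000, seed: int = 0) -> float:
    """Average conversion rate lost by choosing the variation (B) over A.

    Assumption: uniform Beta(1, 1) priors on both conversion rates.
    """
    rng = random.Random(seed)
    total_loss = 0.0
    for _ in range(draws):
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        # Loss is only incurred in the draws where the control is better.
        total_loss += max(rate_a - rate_b, 0.0)
    return total_loss / draws


def can_declare_winner(conv_a: int, n_a: int, conv_b: int, n_b: int,
                       threshold: float = 0.0005) -> bool:
    """Hypothetical stopping rule: stop once the expected loss is small."""
    return expected_loss_of_variation(conv_a, n_a, conv_b, n_b) < threshold


if __name__ == "__main__":
    # Hypothetical data: control 200/5,000, variation 250/5,000.
    print(expected_loss_of_variation(200, 5000, 250, 5000))
    print(can_declare_winner(200, 5000, 250, 5000))
```

Unlike a bare probability of winning, the expected loss also reflects how much is at stake if the decision turns out to be wrong.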

A Bayesian statistics engine can cut your testing time, by as much as half, because it does not rely on reaching a pre-set duration and sample size before you conclude a test. It also gives you more control over your experiments. Because you have a clearly defined probability, you can base your decision on the kind of test you run. For instance, if you test a small detail such as the color of an icon and there is a 92% probability that the variation is the better choice, you may deploy the change. On the other hand, if you test the last step of the funnel, you may choose to wait until the probability reaches 99%. This lets you increase your testing velocity by concluding low-impact tests faster and prioritizing the high-impact ones.

A frequentist statistics model gives you the probability of seeing a difference between variations under the assumption that the test is an A/A test, that is, that there is no real difference. It also assumes that you perform the calculation only after you have collected a sufficient sample size. A Bayesian statistics engine does not make this assumption and enables you to make better business decisions by lowering the probability of running into Type I and Type II errors. This is because it estimates the probability of the variation beating the control, and the potential loss associated with it, allowing you to monitor these metrics continuously while the test is running.

You can’t totally kill the chance of your test outcomes being skewed because of an unforeseen error, as focusing on perfect sureness or certainty is incredibly difficult with statistics. Nonetheless, by picking the right tool, you can bring down your odds of making mistakes or decrease the risk related to these errors to a satisfactory level.

We often try to rush through the testing process to get quick results, but industry experts caution against running too many tests simultaneously. The more elements you test at once, the more traffic the page needs to reach statistical significance. Testing too many elements of a site together also makes it hard to pinpoint which element drove the test’s success or failure. Thus, prioritization of tests is crucial for successful A/B testing.

At times you will be advised to change your signup flow because someone else did so and saw a 40% increase in conversions. However, their traffic and target audience are different from yours. Their audience may be far more diverse than yours, and their method may have been different too. Since no two websites are identical, what worked for them won’t necessarily work for you.

A hypothesis needs to be created before an A/B test is performed. All the next steps depend on it: what should be changed, why it should be changed, what the expected end result is, and so on. The chance of a successful test decreases if you start from the wrong hypothesis.

An A/B test should run until it reaches statistical significance, and how long that takes depends on your traffic and goals. A test may fail to give you correct results if it runs for too long or for too short a time. Insignificant results slow down the process and demotivate testers from re-running a test to find the winner. If one version of your site appears to be winning in the first few days of the test, do not conclude that it is the actual winner. Another common mistake is letting a campaign run for longer than needed, so decide the test duration carefully. Existing traffic, existing conversion rate, and expected improvement are the main factors your test duration depends on.
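A back-of-the-envelope way to turn those factors into a planned duration is sketched below. It assumes you already have a required sample size per variant (derived from your conversion rate and expected improvement) and rounds up to whole weeks so that every weekday is equally represented; the function name, the rounding rule, and the numbers are illustrative assumptions.

```python
import math


def estimated_test_days(sample_per_variant, variants, daily_visitors, traffic_share=1.0):
    """Estimate how many days a test must run to collect the required sample."""
    visitors_needed = sample_per_variant * variants
    visitors_per_day = daily_visitors * traffic_share  # share of traffic included in the test
    days = math.ceil(visitors_needed / visitors_per_day)
    # Assumption: round up to full weeks so weekdays and weekends are covered evenly.
    return math.ceil(days / 7) * 7


# Hypothetical page: 1,500 visitors a day, control plus one variation,
# 8,200 visitors needed per variant.
print(estimated_test_days(8200, 2, 1500))                     # 14
print(estimated_test_days(8200, 2, 1500, traffic_share=0.5))  # 28
```

Fixing the duration up front in this way also guards against both mistakes described above: stopping on an early apparent winner and letting the campaign drift on longer than needed.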

Many organizations give up after their first test fails, which isn’t the way to go if you want to succeed. To improve the odds of your next test, draw on the lessons from all your previous tests while planning and deploying it. The more tests you conduct, the more you learn about how to optimize; A/B testing is an iterative cycle, with each test building on the results of past tests. This improves the probability of your next test succeeding with genuinely better results.

Additionally, test every element repeatedly to arrive at its most optimized version, even if it is already the product of a winning test. Don’t quit testing after one successful test.

To get significant results from A/B testing, you must test with the right traffic. Businesses often end up testing unbalanced traffic, which doesn’t give them clear results. Using traffic that is much higher or lower than appropriate increases the chances of your campaign failing or generating irrelevant or inconclusive results.

Using the wrong tools can slow down your site considerably and make it harder for it to function smoothly. Other tools are not closely integrated with important qualitative tools such as session recordings and heatmaps, which leads to data deterioration. Many cheap A/B testing tools are appearing on the market, and not all of them are equally capable; A/B testing with a faulty tool can harm your test’s chances of success from the start.

If you intend to redesign one of your site’s pages completely, use split testing. If you instead wish to test a series of permutations of CTA buttons, their colors, the text style, or the image on your page’s headline banner, use multivariate testing. Marketers recommend starting your experimentation program with smaller A/B tests to get the hang of the whole cycle. In the long run, however, sticking to plain vanilla A/B testing alone will not help your business that much.

It is wrong to compare site traffic on the days it gets the highest traffic with the days it gets the lowest. Tests need to be run over comparable periods in order to produce meaningful results, because external factors such as holidays and sales affect visitor behavior. When the comparison is not like for like, the probability of reaching an insignificant conclusion increases.

You may face a few challenges when you pursue A/B testing. They are worth working through, since A/B testing can produce a large, positive ROI, helps guide your marketing efforts, and highlights significant elements by pinpointing problem areas. The six primary challenges are described below.

You cannot pick elements to test on a whim, and small changes that are easy to implement are often not the right choices for your site. What matters is finding the tests that will produce significant results for your business goals and objectives. The same holds for complex tests, which is where your website data and visitor analysis come in. These data points help you overcome the challenge of deciding what to test: they cut through an endless backlog by pointing you to the pages with the highest traffic and the elements with the highest potential effect on your conversion rates.

Formulating a hypothesis goes hand in hand with the first challenge, and it is important to have solid data available here. If you test without the right data, you are gambling with your business. Using the data collected in the first step of A/B testing (research), you need to identify the problems with your site and formulate a hypothesis. This is only possible if you follow a well-structured and planned A/B testing program.

Statisticians are better trained than marketers in determining sample sizes. Often, we chase faster results so we can move on. However, the sample size is an integral part of the process and needs to be chosen carefully. Specifically, you need to work out how large your sample should be given your website’s traffic.

It is normal to find either success or failure at every step of the way. This challenge will elaborate on both failures and successes in the process of A/B testing.

Marketers may often have a difficult time explaining failed tests to their team. They might also struggle to decide what to do with the conclusions drawn from those tests. Remember that a failed test is only unsuccessful when you cannot learn from it. The mistake most marketers make is never going back to the tests that failed. Failed tests are building blocks on your way to success, and all data gathered from any test is worth learning from.

Furthermore, not knowing how to analyze the gathered data may result in data deterioration. Without the right process in mind, you can scroll endlessly through session recordings or heatmap data. If you are trying out several different tools, the chances of data leakage increase. It will also be difficult to gather meaningful insights if you don’t know how to make proper use of the data you’ve collected.

If you have run two tests and both produced statistically significant wins, you would naturally think you can now implement the winners, but there is more to do. Most marketers find it difficult to analyze test results and conclusions. This part of the process is extremely important because you may need to replicate the success in the future, so you need to know why your test succeeded. Answering fundamental questions, such as why visitors responded better to one version, why they made the choices they made, and what drove their behavior, gives you that insight. Marketers who can answer these questions learn to analyze test results and gain helpful inputs for future tests.

CRO and A/B testing programs are iterative, and that is their most vital feature, though it is also a cause of concern for many organizations. For optimization to pay off, it should form a cycle that starts with research that is as thorough as possible. Businesses often use A/B testing only rarely because they lack sufficient resources. This challenge isn’t limited to putting in effort or having enough data; building a lasting testing culture is just as important.

It is important to commit to the test you begin and not to edit or change your test goals midway. Changing the experiment settings or tinkering with the design of the control or variation while the test is running is risky. Likewise, the traffic allocation to variations should not be changed, as this alters the sample of returning visitors. Keep to these points if you do not want to skew your test results.
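One common way to keep allocation stable is to derive each visitor’s variant deterministically from a hash of their ID, so a returning visitor always sees the same version for as long as the weights stay fixed. The sketch below illustrates that idea; the function name, the experiment key, and the 50/50 weights are hypothetical and not tied to any specific testing tool.

```python
import hashlib


def assign_variant(user_id, experiment, weights):
    """Map a visitor to a variant deterministically from a hash of their ID."""
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    point = int(digest[:8], 16) / 16 ** 8  # stable pseudo-random value in [0, 1)
    cumulative = 0.0
    for name, weight in weights.items():
        cumulative += weight
        if point < cumulative:
            return name
    return list(weights)[-1]  # guard against floating-point rounding in the weights


weights = {"control": 0.5, "variation": 0.5}  # hypothetical 50/50 split
print(assign_variant("user-123", "cta-copy", weights))
print(assign_variant("user-123", "cta-copy", weights))  # same visitor, same answer
```

Note that editing `weights` mid-test moves the bucket boundaries, so some returning visitors would switch versions, which is exactly the skew the paragraph above warns about.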

Even with all these challenges, the evidence shows that A/B testing produces a high return on investment (ROI). Where marketing teams don’t use an A/B testing program, elements are changed based on intuition or personal preference, which is risky.

From a marketing point of view, A/B testing makes the work more predictable and removes uncertainty and guesswork from the process. Because A/B testing ensures that only data-driven decisions are made, a marketing strategy with clearly defined goals can be created efficiently for your website. The first three challenges can be solved easily if you gather an adequate amount of website and visitor data to analyze. With extensive website and visitor data available, you can prioritize your backlog, and what to test largely decides itself.

A hypothesis is important and needs to be formulated thoughtfully. However, with enough data and business experience, a working hypothesis is something you’ll be able to come up with in no time. You will need to go through the data available and choose the exact changes that will give you your desired end goal. Lastly, you can calculate the sample size that would fit your testing campaign using any tool you find helpful in overcoming the third challenge.

If you’re treating A/B testing as an iterative process, then you may be able to cover the fourth challenge; the last two challenges are related to the approach you follow with A/B testing. Furthermore, you may hire professionals from the field to train you and your team to research data and measure results accurately; this would help you solve the rest of the challenges. Hence, the right approach to tackling the final challenge is to divert your resources into the most critical elements of your business and plan your testing program so that you can work with limited resources.

A/B testing needs to be part of a larger, complete CRO program rather than being treated as an isolated optimization exercise. Prioritization and planning need to be part of an efficient optimization program; testing your website on impulse is not sound CRO practice. The only way to do this well is to have real-time visitor data and to plan the process thoroughly and carefully.

To put it simply, you need to start by analyzing your current website data. Next, collect visitor data and use it to create a backlog of action items. Then prioritize and test everything in that backlog, and draw insights for future use.

A well-functioning testing calendar, or a good CRO program, has four primary stages. As a marketer, you will want to scale your A/B testing program from a handful of ad-hoc tests into something more refined and structured. To start this process, you need to create an A/B testing calendar.

This stage involves measuring your site’s performance in terms of how visitors respond to it. It is the planning phase of your A/B testing program, in which you work out what is happening on your website, why it is happening, and how visitors react to it. Take an online headphones store as an example. We can assume that the business objective for this store is to increase revenue by increasing online orders and sales. The KPI set to track this goal would then be the number of headphones sold.

You need to be sure about your business goals, and tools like Google Analytics can help you measure them. Once you have clearly defined goals, set up GA for your site and define your key performance indicators.

Everything that goes on your site needs to be aligned with your business objectives. However, this phase doesn’t end with defining site objectives and KPIs. It also includes understanding your visitors. The different tools that can be used to gather visitor behavior data have already been discussed. Once the data is gathered, note down your observations and begin planning from there.

When your business goals, site data, KPIs, and visitor behavior data are ready to be analyzed, it is time to set up a backlog.

Your backlog should be a comprehensive list of all the elements of your website or app that you decide to test based on the data you analyzed. With a data-backed backlog prepared, the next step is formulating a hypothesis for each backlog item. With the data gathered in this stage and its analysis ready, you will have a sufficient understanding of what happens on your site and why, so form your hypotheses based on that.

For example, after analyzing the data from the first stage using qualitative and quantitative research tools, you may conclude that the lack of multiple payment methods causes potential customers to drop off on the checkout page. You would then hypothesize that adding multiple payment methods will reduce checkout page drop-off.

The next stage involves prioritizing your testing opportunities. Juggling multiple hypotheses can be tough, and prioritizing helps you sort them out scientifically. By this point, you should be fully aware of your site data and visitor data and be clear about your goals. With the backlog you prepared in the first stage and a hypothesis ready for every candidate, you are well on the way to your optimization roadmap. Now comes the essential task of this stage: prioritizing.

In stage 2, you should be fully equipped to identify the problem areas of your site and the leaks in your funnel. Yet not every action area has equal business potential, so it is essential to weigh your backlog candidates before picking the ones you want to test. Keep a few things in mind while prioritizing items for your test campaign, such as the potential for improvement, page value and cost, the importance of the page from a business perspective, and the traffic on the page.

But how can you ensure that no subjectivity finds its way into your prioritization? Can you stay 100% objective at all times? As human beings, we give a lot of weight to gut feelings, personal opinions, ideas, and values because these are the things that help us in everyday life. CRO, however, isn’t everyday life. It is a scientific process that needs you to be objective and to make sound, data-backed decisions. The best way to weed out subjectivity is by adopting a prioritization framework.

There are numerous prioritization frameworks that even specialists tend to use to figure out their immense backlogs. On this page, you will find out about the most well-known systems that most marketers use – the CIE prioritization structure, the PIE prioritization framework, and the LIFT Model.

The CIE prioritization framework has three parameters (confidence, importance, and ease), and you rate each test on a scale of 1 to 5 for each of them.

Three things need to be addressed first:

By understanding your audience, you can make an educated guess about whether the hypothesis will address users’ fears and doubts and nudge them to convert. Prototyping the persona of the users you are targeting can help you judge the potential of a hypothesis.

Next comes determining the ease of implementing your test. Here are a few questions you may have to answer: “Would it require a great deal of planning on your part to execute the hypothesis?”, “What is the amount of effort needed to plan and build up the solution proposed by the hypothesis?”, and “Could the changes recommended in the hypothesis be carried out by using only the visual editor, or does it require adding custom code?”. Only after you have answered these and other related questions can you rate your backlog candidate on the ease parameter.

A hypothesis built around the checkout page holds more importance than one built around the product features page, for instance. Your site might be attracting visitors in large numbers, yet not all visitors become buyers. Visitors on the checkout page are already deep in your conversion funnel and have a higher chance of converting than visitors on your product features page.

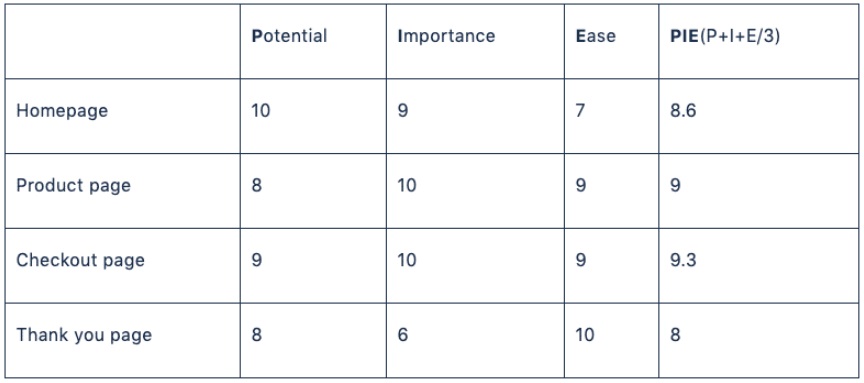

The PIE framework covers three criteria to consider when choosing what to test and when: potential, importance, and ease. In your A/B testing program, the whole point of the prioritization stage is to answer the question, “Where should I test first?”

If organizations pick one tool from the toolbox (even if it were to be the least expensive one), and then begin A/B testing on each item present in the backlog, it would lead to no statistically significant conclusion. To begin with, testing without prioritization will come up short and not provide you with any business benefits. Secondly, not all tools are of similar quality. Prioritization can help you figure out a lot of things about your backlog which can help you determine where you should be investing your time and limited resources so it may be towards a reasonable potential testing candidate. Tools tend to be an investment loss for businesses that fall into the trap of buying cheaper tools with a big backlog to not spend too much. Some tools have good qualitative and quantitative capacities and perform brilliantly while producing statistically significant results even if they’re a bit more expensive.

Backlog candidates need to be rated on how hard they are to test, in terms of both technical and economic ease. Taking the case of an eCommerce business, you might want to test your home page, product page, checkout page, thank-you or rating page, and so on. You can evaluate every candidate as an opportunity based on the criteria mentioned above and pick the ones most worth testing.

Now, according to the PIE framework, you can line these up and mark them based on potential, importance, and ease:

In the market, a large share of organizations do not have a dedicated optimization team, as CRO and A/B testing are relatively new concepts to them. Those that do often restrict it to a handful of people. This is where a planned optimization calendar proves useful: with a well-organized plan and backlog, a small CRO team can focus its limited resources on high-stakes items.

Marked out of 10 total points per criterion*
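As a minimal sketch of how PIE scoring might be applied to the eCommerce pages mentioned above, the example below rates each backlog candidate out of 10 on potential, importance, and ease, then ranks candidates by the average of the three. The individual scores are invented for illustration, and averaging is one common convention rather than the only way to combine the criteria.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    page: str
    potential: int   # room for improvement, 1-10
    importance: int  # value of the traffic to this page, 1-10
    ease: int        # technical and economic ease of testing, 1-10

    @property
    def pie_score(self):
        return (self.potential + self.importance + self.ease) / 3


# Hypothetical scores, for illustration only.
backlog = [
    Candidate("Home page", 8, 9, 6),
    Candidate("Product page", 7, 8, 7),
    Candidate("Checkout page", 9, 10, 5),
    Candidate("Thank-you page", 4, 3, 9),
]

ranked = sorted(backlog, key=lambda c: c.pie_score, reverse=True)
for candidate in ranked:
    print(f"{candidate.page:<15} {candidate.pie_score:.1f}")
```

With these made-up scores, the checkout page ranks first despite being the hardest to test, because its potential and importance outweigh the lower ease score.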

The LIFT Model is another popular conversion optimization framework that helps you analyze web and mobile experiences and develop good A/B test hypotheses. With the help of prioritization, you can have your A/B testing calendar ready for execution for the next 6 to 12 months. This gives you enough time to prepare for each test and plan around the resources you have available.

The LIFT Model uses six factors to evaluate the page experience from the visitor’s perspective: value proposition, clarity, relevance, distraction, urgency, and anxiety.

The A/B test itself is the third and most important stage. By now you have all the required data and a prioritized backlog. Once your hypotheses are aligned with your goals and prioritized, create the variations and launch the test. Make sure you meet all the requirements for statistically significant results before ending the test, for example, testing on the right traffic, testing for the right duration, and not testing too many elements at once.

This stage involves reviewing: you learn from your past and current tests and apply those lessons to future tests. Once your test has run for the right amount of time, stop it and have your team analyze the data collected. The first thing you may notice is that one of the versions tested performed better than all the others and won.

There may be three results of your test:

The significance of your test results can be determined using tools such as an A/B test significance calculator. If you don’t get the results you hoped for, do not give up. A better approach is to make improvements to the version and keep testing. Start by retracing your steps one by one and carefully examining where you went wrong, then fix the mistake and start again.
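For readers curious about what such a calculator does under the hood, here is a minimal sketch of one common frequentist method, a pooled two-proportion z-test. Real calculators may use different methods or corrections; the function name and the visitor counts are illustrative assumptions.

```python
from statistics import NormalDist


def ab_significance(conv_control, n_control, conv_variant, n_variant):
    """Return the z statistic and two-sided p-value for a difference in conversion rates."""
    rate_control = conv_control / n_control
    rate_variant = conv_variant / n_variant
    # Pooled rate under the assumption that both versions convert equally well.
    pooled = (conv_control + conv_variant) / (n_control + n_variant)
    std_error = (pooled * (1 - pooled) * (1 / n_control + 1 / n_variant)) ** 0.5
    z = (rate_variant - rate_control) / std_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: 200/4000 conversions on control, 250/4000 on the variation.
z, p_value = ab_significance(200, 4000, 250, 4000)
print(f"z = {z:.2f}, p = {p_value:.4f}, significant at 95%: {p_value < 0.05}")
```

A p-value below your chosen threshold (0.05 for 95% confidence) suggests the difference is unlikely to be due to chance alone, provided the test ran for its planned duration.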

Keep the following points in mind as you scale your A/B test plan:

When increasing your testing frequency, make sure you don’t compromise your website’s overall conversion rate. Testing several elements at the same time makes it hard to determine which element had the greatest impact on the success or failure of the test. If you want to test two or more important elements on the same web page, space the tests out.

For instance, while testing your ad’s landing page, you may decide to test the CTA to increase signups and the banner to reduce the bounce rate and increase time spent on the page. For the CTA, you decide to change the copy based on your data. For the banner, you decide to test a video against a static image. Suppose you run both tests at the same time and, in the end, achieve both goals. The problem is that the data shows that, while signups increased with the new CTA, the video (in addition to reducing the bounce rate and increasing the average time spent on the page) also contributed: most of the people who watched the video also signed up. Because you failed to space the two tests, you now cannot tell which element contributed most to the increase in signups. This is why planning goes a long way. Had the two tests been timed more carefully, both could have yielded more meaningful insights.

Having a prioritized calendar gives your optimization team a clear idea of what to test next and when to run each test. Once you have tested most of the items in your backlog, revisit every successful and failed campaign. Analyze the test results and determine whether there is enough data to justify running another version of the test. If there is, re-run the test with the necessary changes.

Your testing frequency is critical to scaling your testing program, and your optimization team needs to plan it so that none of the tests affect one another or your website’s performance. One way to do this is to run tests simultaneously on different pages of your website. Another is to test elements of the same page at different times. Either way, you increase your testing frequency without the tests affecting each other. For example, you can test one element each on the home page, the checkout page, and so on at the same time, and move on to other elements once the current tests are complete.

To scale your A/B testing program and get more benefit from the same effort, track multiple metrics. Typically, you measure the performance of an A/B test against a single conversion goal and rely on that goal to determine the winning variation. However, the winning variation may affect other website goals as well. In the example discussed above, the video reduced the bounce rate and increased time spent on the page, and it also contributed to the increase in signups.

When it comes to using A/B testing for optimization in the travel industry, Booking.com easily surpasses other eCommerce companies. Booking.com has treated A/B testing as the process that creates a flywheel effect for revenue, and it has followed a rigorous testing regime since the day it began. The rate at which Booking.com runs A/B tests is unmatched, especially when it comes to testing its copy.

Although Booking.com has been A/B testing for more than a decade, it is still constantly looking for ways to improve user experience. Nearly one thousand A/B tests are currently running on Booking.com’s website, and this consistent effort is why it stays ahead. This shows how hard Booking.com works to optimize customer engagement and user experience on its website. For your own company, A/B testing can help with goals such as increasing revenue from ancillary purchases or the number of successful bookings on your website or mobile app, and much more. You can try testing your homepage search modals, search results page, ancillary product presentation, checkout progress bar, and so on.

In 2017, Booking.com decided to broaden its reach by offering rental properties for vacations. Booking.com has made A/B testing part of its everyday work since the company started, and it has reached its current testing velocity by eliminating HiPPOs (highest-paid person’s opinions) and prioritizing data. Booking.com’s employees are also allowed to run tests on ideas they believe may help grow the business, which gives them the freedom to try new strategies. The expansion led to Booking.com partnering with Outbrain, a native advertising platform, to help grow its global property owner registrations.

Within the first few days of the launch, the team at Booking.com found that although many property owners completed the first signup step, they got stuck in the next steps. At the time, pages built for paid search in their local campaigns were being used for the signup process. Working closely together, the teams created three versions of the landing page copy for Booking.com. Additional details such as social proof, awards and recognitions, and user rewards were added to the variations.

The test ran for two weeks and produced a 25% increase in owner registrations. The test results also showed a decline in the cost of each registration.

To achieve their primary goals, marketers add relevant content to their websites, conduct webinars, show ads to potential buyers, run special offers or sales, and use other attention-grabbing tactics. The main goal is to attract targeted buyers and generate high-quality leads for the sales team, which means testing the vital elements of your demand generation engine. The main objective of SaaS A/B testing is to enhance user experience and increase conversions. All of that effort could go to waste if the landing page visitors are directed to is not fully optimized for the best user experience.

POSist, a leading SaaS-based restaurant management platform with more than 5,000 clients at over one hundred locations across six countries, wanted to increase its demo requests. The team at POSist wanted to reduce drop-off on the website’s homepage and Contact Us page, so they created variations of both pages.

The homepage and Contact Us page are the most important pages in their funnel. On your own site, you can try testing your free trial signup flow, lead form elements, homepage social proof, homepage messaging, CTA text, and much more.

This is what the control version of the homepage looked like:

POSist A/B test control version

The POSist team hypothesized that adding more relevant, conversion-focused content to the site would improve the user experience and result in higher conversions. They therefore created two variations to test against the control.

This is what the variations looked like:

POSist A/B test Variation 1 Version

POSist A/B test Variation 2 Version

Variation 1 was first tested against the control, and variation 1 won. To improve the page further, variation 1 was then tested against variation 2, and variation 2 turned out to be the winner. Implementing the new variation increased page visits by about 5%.
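The two test rounds described above both depend on splitting visitors randomly but consistently between two versions. The sketch below shows one common way to do that with a hash of the visitor ID. The function name, experiment names, and visitor IDs are hypothetical, and nothing here is meant to describe POSist's actual tooling.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants: list[str]) -> str:
    """Deterministically map a visitor to one variant with roughly equal odds.

    Hashing the experiment name together with the visitor ID means the same
    visitor always sees the same version within one experiment, while a new
    experiment reshuffles the audience.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]


# Round 1: control against variation 1. Round 2: the winner against variation 2.
round_1 = ["control", "variation_1"]
round_2 = ["variation_1", "variation_2"]

visitors = [f"visitor-{i}" for i in range(10_000)]  # hypothetical visitor IDs
split_1 = {name: 0 for name in round_1}
for uid in visitors:
    split_1[assign_variant(uid, "homepage_round_1", round_1)] += 1

print(split_1)  # roughly half of the visitors in each version
```

Because the assignment is a pure function of the experiment name and the visitor ID, no per-visitor state needs to be stored to keep the experience consistent across visits.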

With A/B testing, online shops and eCommerce companies can optimize their checkout funnel, reduce the cart abandonment rate, and increase their average order value. You can test how shipping costs are displayed and how and when free shipping is highlighted. Text and color adjustments on the checkout page can also be tested. A well-known example is 1-Click ordering, which was introduced in the late 1990s after detailed testing and analysis. It allows users to buy products without going through the shopping cart. In the e-commerce industry, 1-Click ordering has been one of the most productive features for attracting customers.
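The checkout metrics mentioned above, cart abandonment rate and average order value, can be compared per variant with very little code. The following is a minimal sketch; the class name, field names, and all figures are assumptions made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class CheckoutStats:
    carts_created: int     # sessions that added at least one item to the cart
    orders_completed: int  # carts that ended in a purchase
    revenue: float         # total revenue from the completed orders

    @property
    def cart_abandonment_rate(self) -> float:
        """Share of carts that did not end in a purchase."""
        return 1 - self.orders_completed / self.carts_created

    @property
    def average_order_value(self) -> float:
        """Revenue per completed order."""
        return self.revenue / self.orders_completed


# Hypothetical results for a control checkout page and one variation
control = CheckoutStats(carts_created=2_000, orders_completed=600, revenue=30_000.0)
variation = CheckoutStats(carts_created=2_000, orders_completed=700, revenue=36_400.0)

for name, stats in (("control", control), ("variation", variation)):
    print(f"{name}: abandonment={stats.cart_abandonment_rate:.0%}, "
          f"AOV={stats.average_order_value:.2f}")
```

Tracking both numbers side by side matters because a change can lower abandonment while also lowering the value of each order, and only the combination tells you whether the variation is really better.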

Because of its size and its immense commitment to delivering the best customer experience, Amazon is a leader in conversion optimization. With 1-click ordering, the card details and delivery address are already stored, so users can order with a single click of a button and then wait for the products to be delivered to their home. The simplicity of the purchase made it hard for users to ignore and move to another store, and this was ground-breaking. The innovation had such a commercial impact that Amazon patented it in 1999 (the patent has since expired), and Apple, seeing its commercial value, bought a license to use it in its online store in 2000.

Amazon delivers the kind of user experience it does by following an ongoing, structured A/B testing process. Coming up with good ideas takes immense hard work and rigorous testing. Every change is first tested on the target audience to see whether it is beneficial, and only then is it implemented. Each element is optimized to meet the expectations of the audience.

Using the user information and website data it has gathered, Amazon simplifies each step as far as possible to meet the requirements and expectations of its users. Every page, from the homepage to the checkout page, contains only the essentials. If you have looked closely at Amazon’s purchase funnel, you will have seen that it hardly resembles the purchase funnels of other websites; it is optimized around what its own users expect.

All of this is achieved with one weapon: A/B testing. A commonly noticed example is the small shopping cart icon at the top right of the Amazon home page, which stays visible no matter which page of the website you are on. The fascinating thing about this icon is that it doesn’t simply serve as a reminder of added products; it does more than that. The current version offers five additional features.

Having so many choices behind one small icon reduces the visitor’s cognitive load and provides a great user experience. The shopping cart page also suggests similar products that match your interests. This engages users further and helps them eventually find a product of their choice.

Netflix users can vouch for its streaming experience; however, not everyone knows how Netflix made that experience so good. Netflix follows a detailed, structured A/B testing plan to deliver a seamless, engaging user experience that other companies have struggled to achieve despite intense efforts. As I mentioned above, the secret is that every change Netflix makes to its website goes through deep analysis and an intensive A/B test before it is launched.

Any media or publishing company wants to grow its readership and audience, increase the time visitors spend on its website, increase subscriptions, and boost video views and other content through social sharing. You can test different email signup methods, social sharing buttons, recommended content, highlighted subscription offers, and other promotional options.

Netflix personalizes its homepage extensively based on each user’s profile to give every user the best experience. It decides how many rows go on the homepage and which movies and shows go into those rows by analyzing the user’s streaming history and settings.

They follow the same approach with media title pages as well. Netflix personalizes which titles are displayed, which title text makes us click, which thumbnails are included, and which social proof makes our decision easier and quicker. This strategy is what made them a giant in their industry.

